Video Coding Efficiency Enhancement by means of a Novel Representation of Video Material

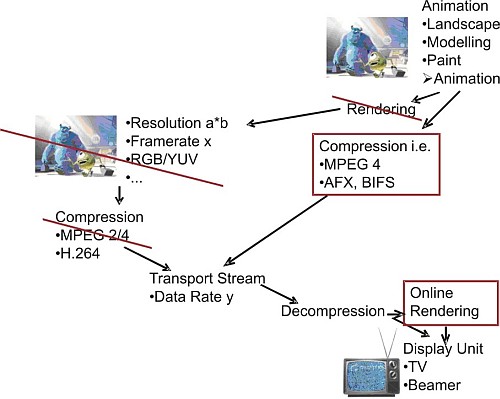

The processing chain of film production, coding, distribution and finally reproduction on the consumer display contains several steps that leave room for improvement. Not only fully animated movies but most films now contain increasing amounts of animated content (e.g. special effects or computer-generated characters). Every animation is built from 3D objects and then rendered into frames. During rendering, information that exists in the 3D models (such as object geometry and motion) is lost and cannot easily be recovered. The encoder then has to re-estimate motion with computationally expensive block based motion estimation to achieve efficient compression.
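The computational cost of block based motion estimation can be made concrete with a minimal full-search block matcher using the sum of absolute differences (SAD). This is a generic textbook sketch, not the encoder used in this project. The block size (8×8), search ranges (±4 in the toy example, ±16 for the frame-level estimate), the 1920×1080 frame size and the synthetic test frames are all illustrative assumptions. The function `ops_per_frame` is a hypothetical helper that counts the absolute differences an exhaustive search needs.

```python
def sad(cur, ref, bx, by, dx, dy, n):
    """Sum of absolute differences between the n x n block at (bx, by) in the
    current frame and the block displaced by (dx, dy) in the reference frame."""
    return sum(
        abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
        for y in range(n)
        for x in range(n)
    )


def full_search(cur, ref, bx, by, n, r):
    """Exhaustive block matching over all displacements in [-r, r] x [-r, r]
    that keep the candidate block inside the reference frame.
    Returns (motion_vector, best_sad, candidates_tested)."""
    h, w = len(ref), len(ref[0])
    best_mv, best_cost, tested = None, None, 0
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            inside = (0 <= bx + dx and bx + dx + n <= w
                      and 0 <= by + dy and by + dy + n <= h)
            if not inside:
                continue  # candidate block would leave the frame
            cost = sad(cur, ref, bx, by, dx, dy, n)
            tested += 1
            if best_cost is None or cost < best_cost:
                best_mv, best_cost = (dx, dy), cost
    return best_mv, best_cost, tested


def ops_per_frame(width, height, n, r):
    """Upper bound on absolute differences for a full search over one frame
    (ignores candidates clipped at the frame border)."""
    blocks = (width // n) * (height // n)
    return blocks * (2 * r + 1) ** 2 * n * n


# Synthetic 32x32 frames: every reference pixel has a distinct value, so the
# true match is unique. The current frame is the reference sampled at an
# offset of (2, 1), clamped at the border.
W = H = 32
ref = [[y * W + x for x in range(W)] for y in range(H)]
cur = [[ref[min(y + 1, H - 1)][min(x + 2, W - 1)] for x in range(W)]
       for y in range(H)]

mv, cost, tested = full_search(cur, ref, bx=8, by=8, n=8, r=4)
print(mv, cost, tested)                  # (2, 1) 0 81
print(ops_per_frame(1920, 1080, 8, 16))  # 2258150400
```

Under these assumptions an exhaustive search needs roughly 2.3 × 10^9 absolute differences per 1920×1080 frame. Practical encoders use faster search patterns, but the motion they estimate is information that was already present in the 3D model before rendering.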

In a receiver or display device, the data has to be decoded into frames at the rate defined by the transmitted or stored stream. In many cases the characteristics of the video material and of the display device (e.g. resolution and frame rate) do not match, so spatial and temporal scaling algorithms are needed, which again consume significant computing power to minimize quality loss. In addition, more and more receivers, video game consoles and display devices are, and will be, equipped with graphics processing units that are currently not used for video decoding.
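The temporal part of this mismatch can be illustrated by mapping each display refresh onto the timeline of the decoded stream with exact rational arithmetic. The frame rates used here (24 fps material on a 60 Hz display) are illustrative assumptions, not values from the project, and `source_positions` and `needs_interpolation` are hypothetical helper names. Every refresh that lands between two decoded frames has to be filled by repeating a frame or by interpolating a new one.

```python
from fractions import Fraction


def source_positions(src_fps, disp_fps, n_refresh):
    """Position of each display refresh on the decoded-frame timeline,
    measured in source frames (exact rational arithmetic)."""
    step = Fraction(src_fps) / Fraction(disp_fps)
    return [k * step for k in range(n_refresh)]


def needs_interpolation(positions):
    """True where a refresh falls between two decoded frames, i.e. where a
    frame must be repeated or interpolated."""
    return [p.denominator != 1 for p in positions]


# Illustrative case: 24 fps film material on a 60 Hz display.
pos = source_positions(24, 60, 5)
print([str(p) for p in pos])     # ['0', '2/5', '4/5', '6/5', '8/5']
print(needs_interpolation(pos))  # [False, True, True, True, True]
```

In this example four out of every five refreshes fall between decoded frames. Non-integer rates such as 23.976 fps should be passed as `Fraction(24000, 1001)` rather than as a float, so that the positions stay exact.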

In this project we investigate whether it is beneficial to keep the original object based information of the video material. The main differences between the conventional and the proposed video representation chain are shown in Fig. 1.

Beyond these basic differences, the discussed video representation offers further benefits. Because the object based content is rendered directly in the display, the properties of the display can be taken into account during rendering, so no quality loss is introduced by interpolation. Another advantage of the object based representation emerges with the current 3D trend. If 3D content is stored or broadcast in a pixel based representation, a certain number of views has to be stored (at least two, e.g. nine for multi-view displays). Every additional view adds an overhead of ~55%. With an object based representation, only the properties of the added camera have to be saved for each view, which results in negligible overhead.
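The multi-view comparison can be put into numbers with a small calculation. It assumes that "~55%" means each view beyond the first adds 55% of the single-view size. For the object based side it assumes that each added camera is described by a hypothetical 3×4 projection matrix of 32-bit floats sent with every frame, which is deliberately pessimistic since a fixed camera rig would only need to be sent once. The 8 Mbit/s base bitrate and 25 fps are placeholders used only to give the camera data a scale; the function names are hypothetical.

```python
def pixel_based_size(n_views, extra_view_overhead=0.55):
    """Relative stream size for n_views when each view beyond the first adds
    `extra_view_overhead` times the single-view size (assumed reading of ~55%)."""
    if n_views < 1:
        raise ValueError("need at least one view")
    return 1.0 + extra_view_overhead * (n_views - 1)


def camera_overhead_bps(n_views, fps, floats_per_camera=12, bits_per_float=32):
    """Bits per second for one camera description per extra view and frame.
    12 floats = a 3x4 projection matrix (hypothetical, pessimistic choice)."""
    return (n_views - 1) * floats_per_camera * bits_per_float * fps


base_bps = 8_000_000  # hypothetical single-view bitrate, for scale only
for n in (2, 9):
    pix = pixel_based_size(n)
    obj = camera_overhead_bps(n, fps=25) / base_bps
    print(f"{n} views: pixel-based x{pix:.2f}, camera data +{obj:.2%}")
```

Under these assumptions nine views grow a pixel based stream to about 5.4 times its single-view size, while even per-frame camera data for the eight extra views stays below 1% of the placeholder base bitrate.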

Our research focuses on the possibilities of an object based representation of video data, its efficient implementation and the resulting gains in source coding efficiency.

Project leader

Former project members

Dr.-Ing. J. Wünschmann