Projects

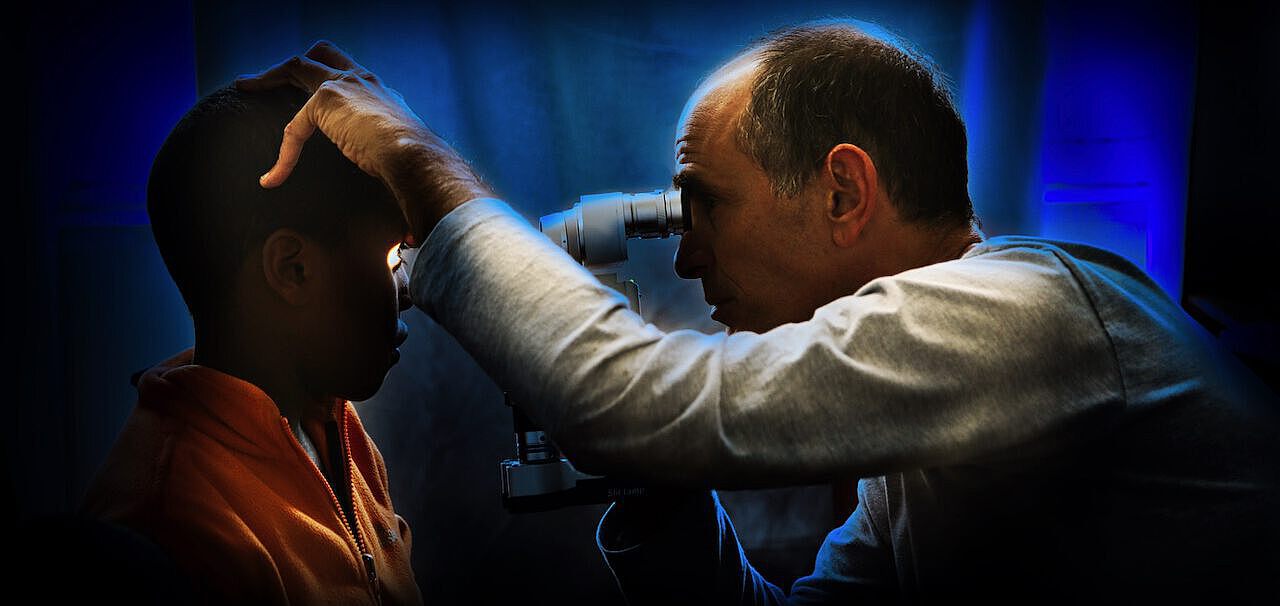

In this project we investigate how children learn to see after being born blind (due to bilateral dense cataracts) and receiving surgery only later in life. Many such children live in developing or emerging countries with underdeveloped health systems. Our project is conducted in Ethiopia in collaboration with a team of doctors from Israel (Dr. Itay Ben Zion) and is funded by the German Research Foundation (DFG) within the DIP framework program.

More information can be found here.

Every autonomously acting system, whether biological or technical, generally has a set of sensors whose information must be integrated in order to act in a meaningful, goal-directed way. Such a system does not merely take in information passively; ideally, it uses self-motion to act in a way that increases the information content available for solving a given task. This produces streams of sensor information containing large amounts of data, most of which are highly redundant. The reason is that the sensor information at a given point in time usually differs only marginally from what is detected a short time later. Only changes (caused by events) provide meaningful information. It therefore makes sense, following the neural example set by biology, to register and process only the changes in the data streams. This event-based coding increases the energy efficiency and the sparseness of the sensor system many times over. The aim of this project is therefore to investigate the event-based analysis of both visual and auditory sensor information and to develop biologically plausible models of information processing. One focus is on spatial perception and, in particular, on analyzing the interaction of events generated externally and events generated by self-motion (e.g. eye or body movements). A central question is how the multisensory information from the visual and auditory sensors is integrated during self-motion. It will be crucial to recognize how the multisensory events relate to one another and to align the different sensory representations with each other. Detecting the correlation between the signals, compensating for events generated by self-motion, and learning the representations and their relationship to one another will play the decisive role here. To demonstrate the efficiency of sensory processing and multisensory integration during self-motion, the resulting biologically plausible processing mechanisms will be implemented in neuromorphic algorithms, which will then control an autonomous robot platform performing a multisensory search task. We will test to what extent the robot control algorithms yield results comparable to those known from humans or from biology in general. To this end, the quantity and quality of the available information will be varied, and the agreement between the multisensory information sources will be manipulated.
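To make the idea of event-based coding concrete, here is a minimal Python sketch of a send-on-delta encoder: it emits an event only when the signal has changed by at least a fixed threshold since the last event. This is an illustration under our own assumptions, not the project's actual model; the function names `encode_events` and `decode_events` and the threshold value are hypothetical.

```python
def encode_events(signal, threshold):
    """Return (sample_index, polarity) events for a sampled signal.

    Assumption: a simple send-on-delta scheme. An event is emitted each
    time the signal has moved by at least `threshold` away from the
    level at which the previous event was emitted.
    """
    events = []
    reference = signal[0]
    for index, value in enumerate(signal[1:], start=1):
        # A large jump may cross several thresholds at once.
        while value - reference >= threshold:
            reference += threshold
            events.append((index, +1))
        while reference - value >= threshold:
            reference -= threshold
            events.append((index, -1))
    return events


def decode_events(events, start_value, threshold, length):
    """Rebuild a piecewise-constant approximation from the events."""
    reconstruction = [start_value] * length
    level = start_value
    position = 0
    for index, polarity in events:
        # Hold the current level until the next event occurs.
        for j in range(position, index):
            reconstruction[j] = level
        level += polarity * threshold
        position = index
    for j in range(position, length):
        reconstruction[j] = level
    return reconstruction


# A signal that is constant except for a single step change.
signal = [0.0] * 50 + [1.0] * 50
events = encode_events(signal, threshold=0.5)
reconstruction = decode_events(events, signal[0], 0.5, len(signal))
print(len(signal), "samples encoded as", len(events), "events")
```

In this toy case the constant stretches of the signal produce no events at all, so the redundancy of the raw stream is removed and only the change itself is transmitted.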

More information here.

User-adaptive systems are a recent trend in technological development. Designed to learn the characteristics of the user interacting with them, they change their own features to provide users with a targeted, personalized experience. Such user-adaptive features can affect the content, the interface, or the interaction capabilities of the systems. However, irrespective of the specific source of adaptation, users are likely to learn and change their own behavior in order to correctly interact with such systems, thereby leading to complex dynamics of mutual adaptation between human and machine. While it is clear that these dynamics can affect the usability, and therefore the design, of user-adaptive systems, scientific understanding of how humans and machines adapt jointly is still limited. The main goal of this project is to investigate human adaptive behavior in such mutual-learning situations. A better understanding of adaptive human-machine interactions, and of human sensorimotor learning processes in particular, will provide guidelines, evaluation criteria, and recommendations that will be beneficial for all projects within SFB/Transregio 161 that focus on the design of user-adaptive systems and algorithms (e.g. A03, A07, projects in Group B). To achieve this goal, we will carry out behavioral experiments with human participants and base our empirical choices on the framework of optimal decision theory as derived from the Bayesian approach. This approach can be used as a tool to construct ideal observer models against which human performance can be compared.
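As an illustration of what an ideal observer model can look like, the following Python sketch combines several noisy estimates of the same quantity by weighting each with its reliability (inverse variance). It assumes independent Gaussian noise and a flat prior, which is a common textbook case rather than the specific model used in this project; the function name `ideal_observer_estimate` and the example numbers are hypothetical.

```python
def ideal_observer_estimate(estimates, sigmas):
    """Combine independent Gaussian cues by inverse-variance weighting.

    Assumptions: each cue is an unbiased estimate of the same quantity,
    corrupted by independent Gaussian noise with standard deviation
    sigma, and the prior is flat. Under these assumptions the weighted
    average below is the minimum-variance (ideal observer) estimate.
    """
    if len(estimates) != len(sigmas) or not estimates:
        raise ValueError("need one positive sigma per estimate")
    reliabilities = [1.0 / (sigma ** 2) for sigma in sigmas]
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]
    combined = sum(w * x for w, x in zip(weights, estimates))
    combined_sigma = (1.0 / total) ** 0.5
    return combined, combined_sigma, weights


# Two cues about the same quantity; the first is twice as precise.
combined, combined_sigma, weights = ideal_observer_estimate(
    estimates=[10.0, 14.0], sigmas=[1.0, 2.0]
)
print("weights:", weights)
print("combined estimate:", combined, "sigma:", combined_sigma)
```

Human performance can then be compared against such a benchmark, for example by checking whether the variability of participants' responses approaches the predicted combined standard deviation, which is never larger than that of the most reliable single cue.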

More information here.

Real-world tasks as diverse as drinking tea or operating machines require us to integrate information across time and the senses rapidly and flexibly. Understanding how the human brain performs dynamic, multisensory integration is a key challenge with important applications in creating digital and virtual environments. Communication, entertainment and commerce are increasingly reliant on ever more realistic and immersive virtual worlds that we can modify and manipulate. Here we bring together multiple perspectives (psychology, computer science, physics, cognitive science and neuroscience) to address the central challenge of the perception of material and appearance in dynamic environments. Our goal is to produce a step change in the industrial challenge of creating virtual objects that look, feel, move and change like ‘the real thing’. We will accomplish this through an integrated training programme that will produce a cohort of young researchers who are able to fluidly translate between the fundamental neuro-cognitive mechanisms of object and material perception and diverse applications in virtual reality. The training environment will provide 11 ESRs with cutting-edge, multidisciplinary projects, under the supervision of experts in visual and haptic perception, neuroimaging, modelling, material rendering and lighting design. This will provide perceptually-driven advances in graphical rendering and lighting technology for dynamic interaction with complex materials (WP1-3). Central to the fulfillment of the network is the involvement of secondments to industrial and public outreach partners. Thus, we aim to produce a new generation of researchers who advance our understanding of the ‘look and feel’ of real and virtual objects in a seamlessly multidisciplinary way. Their experience of translating back and forth between sectors and countries will provide Europe with key innovators in the developing field of visual-haptic technologies.

More information here.

CrowdDNA is a radically new concept to assist public space operators in the management of crowds, i.e., mass event organization, heavy pedestrian traffic management, crowd movement analysis and decision support. CrowdDNA technology is based on a new generation of crowd simulation models, which are capable of predicting the dynamics, behaviour and risk factors of crowds of extreme density. The motion of dense crowds emerges from the combination of actions of individuals and the physical contacts and pushes between these individuals. The intense interactions present in dense crowds may turn into stampedes or fatal crushing. The accurate prediction of crowd behaviours induced by physical interactions is crucial to help authorities control such crowds and avoid fatalities. CrowdDNA will introduce the missing ‘nanoscopic’ scale of physical interactions in simulation models, in close relation to the specific large-scale macroscopic crowd behaviours. CrowdDNA brings together an interdisciplinary consortium with ambitious SMEs in the field of crowd management. Together, they will develop models that capture these limb-to-limb contacts and pushes in simulations and accurately predict the degree of crowd pressure and the risks of falling and crushing. The implications of this technology will be far-reaching. CrowdDNA is a first attempt to combine biomechanical and behavioural simulation in complex scenarios of interactions between many humans. It will revolutionize the practices of crowd management to meet the requirements of modern society for safety and comfort at mass events or in crowded transportation facilities. CrowdDNA builds the foundations of new research on crowds, and opens up new opportunities for studies on physical interaction across cognitive sciences and biomechanics, as well as robotics and autonomous vehicles for safe navigation among people.

More information here.

Navigation is a central human ability that ensures survival. Until now, the study of navigation has focused almost exclusively on the 2D horizontal plane, even though our world is 3D. Our long-term goal is to understand and model human 3D navigation behavior. In the Applied Cognitive Psychology Group, we have the chance to open up this new field of research, as we have just set up a unique 3D locomotion platform akin to a flight simulator topped with a treadmill. This puts us in an exceptional position to expand from 2D to 3D navigation, an unsolved question that currently animates a community striving for experimental evidence and theoretical models. This project will help to kick off this new line of research and will then put us in an excellent starting position to apply for a major grant on exploring and modelling human 3D navigation behavior, including its applications in VR.

More information here.