Lab Infrastructure

In the Applied Cognitive Psychology group we study human multisensory perception and action, and for this we have a range of setups and lab infrastructure available. Our studies require virtual reality equipment for visual stimulation, but also tools and robotic devices for haptic interaction, techniques for audio stimulation, and platforms that enable virtual locomotion, navigation and manual interaction. Below is a list of the major items available in the lab.

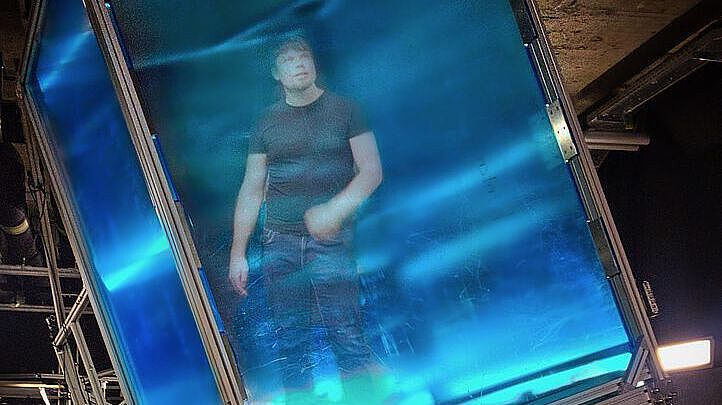

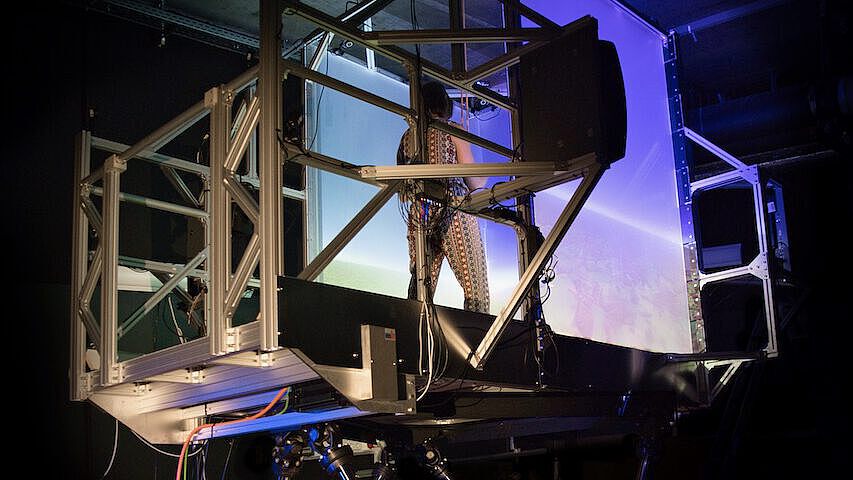

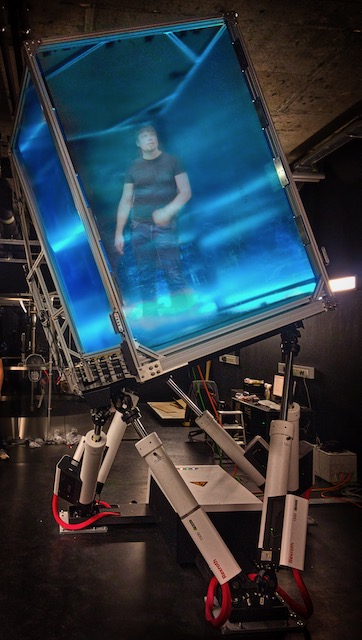

The Virtual Locomotion Setup consists of a 6DoF Stewart motion platform of the kind usually used for flight simulators, able to carry 1.5 t of equipment. The platform is topped with a 2.5 m x 1.5 m treadmill on which people can walk freely. For visualization, the treadmill is surrounded by a 4-sided CAVE, and optical tracking is used to control both the visualization and the movement of the platform and treadmill. More information here.

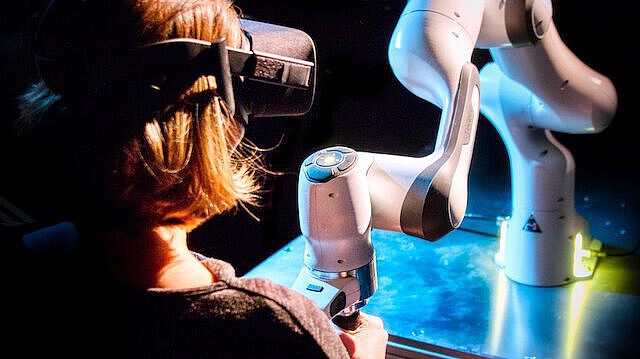

The Robo Lab consists of a 7DoF Panda robot arm from Franka Emika. This is a compliant, collaborative robot designed for human-robot interaction. We use the robot arm to study sensorimotor learning, human-computer collaboration, and human-machine interaction. More information here.

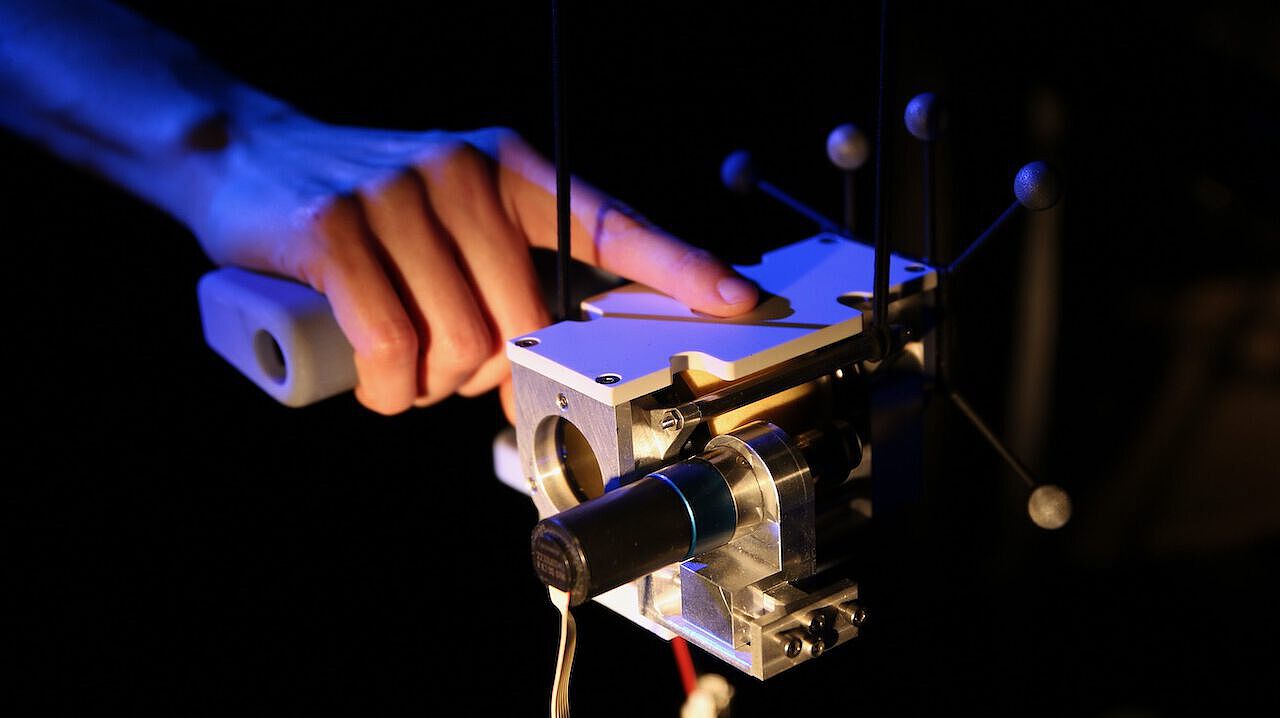

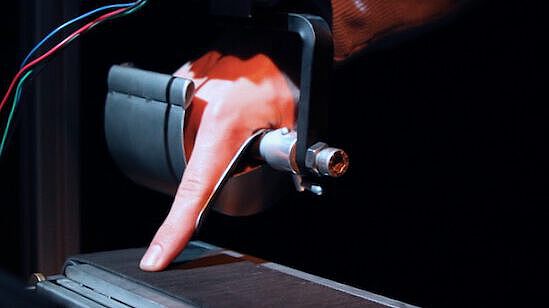

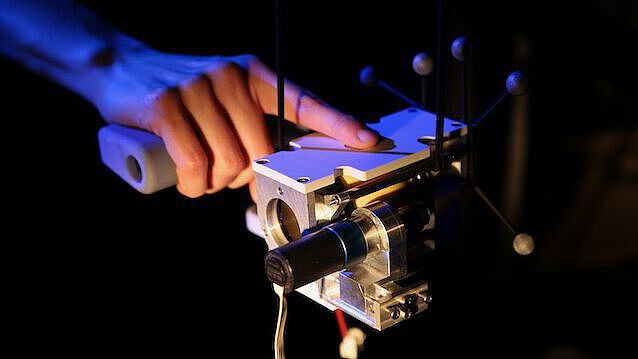

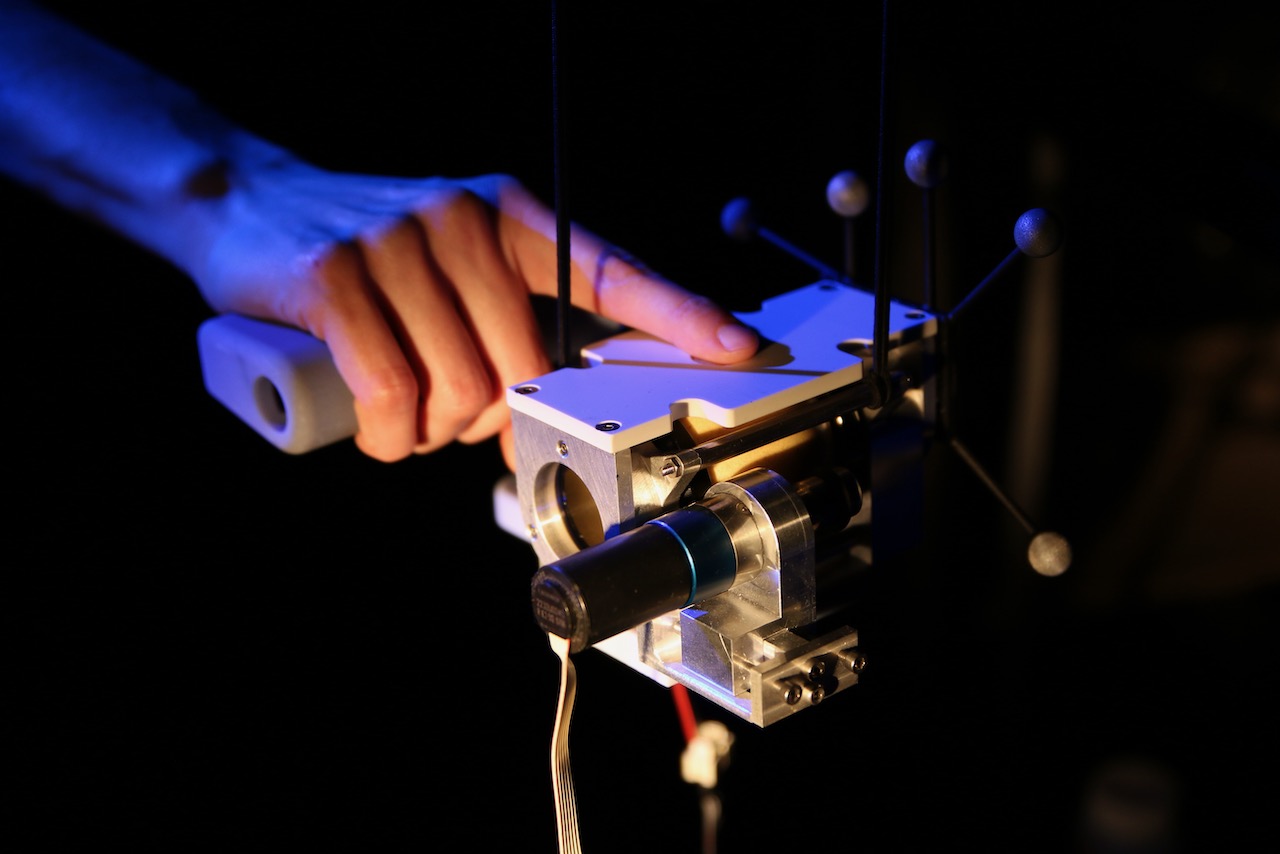

In research, touch is highly underrepresented compared to, for example, vision and audition. The main reason is that there are only a few devices for virtualizing haptic stimuli that would allow the sense of touch to be studied in a systematic and controlled fashion. This is despite the fact that haptics is an emerging field in industry and in applications such as smartwatches, smartphones, virtual reality, or the automotive industry, to name a few. To this end, we have designed and custom-built a range of haptic interaction devices, and we also rely on some of the few commercial products in our experiments. For more information see here.

Here we use virtual reality setups to study human navigation performance. More information here.

We study the interaction of different cues for dynamic spatial perception in audition, such as the time difference between the ears (interaural time difference, ITD), the intensity difference between the ears (interaural level difference, ILD), spectral cues captured by the head-related transfer function (HRTF), the Doppler effect, and dispersion. To this end we use either simulated cues presented via headphones or real speakers placed in 3D space (either fixed or in motion). More information here.
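To give a rough feel for the magnitudes of two of these cues, the sketch below uses two textbook approximations: the Woodworth spherical-head model for the ITD of a distant source, and the classic Doppler formula for a moving source and a stationary listener. This is a minimal sketch, not the lab's stimulus-generation code; the head radius (8.75 cm), the speed of sound (343 m/s), and the function names `woodworth_itd` and `doppler_frequency` are assumptions chosen for illustration.

```python
import math

# Assumed constants, for illustration only.
SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 degrees C
HEAD_RADIUS = 0.0875    # m, a commonly used average adult head radius


def woodworth_itd(azimuth_deg: float,
                  radius: float = HEAD_RADIUS,
                  c: float = SPEED_OF_SOUND) -> float:
    """Interaural time difference in seconds for a far-field source.

    Woodworth spherical-head approximation: ITD = (r / c) * (theta + sin(theta)),
    with theta the azimuth in radians (0 = straight ahead, +90 = fully lateral).
    Intended for azimuths between -90 and +90 degrees.
    """
    theta = math.radians(azimuth_deg)
    return (radius / c) * (theta + math.sin(theta))


def doppler_frequency(f_source: float,
                      radial_speed: float,
                      c: float = SPEED_OF_SOUND) -> float:
    """Frequency heard by a stationary listener from a moving source.

    radial_speed > 0 means the source approaches the listener,
    radial_speed < 0 means it recedes.
    """
    if radial_speed >= c:
        raise ValueError("source speed must stay below the speed of sound")
    return f_source * c / (c - radial_speed)


if __name__ == "__main__":
    # ITD grows from zero straight ahead to roughly 0.66 ms at the side.
    for az in (0, 30, 60, 90):
        print(f"azimuth {az:3d} deg -> ITD {woodworth_itd(az) * 1e6:6.1f} us")
    # A 1 kHz source moving at 10 % of the speed of sound.
    print(f"approaching: {doppler_frequency(1000.0, 34.3):.1f} Hz")
    print(f"receding:    {doppler_frequency(1000.0, -34.3):.1f} Hz")
```

The spherical-head model ignores the ear shape that the HRTF captures, so it only describes the timing cue; real HRTFs are typically measured or taken from databases rather than computed this way.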

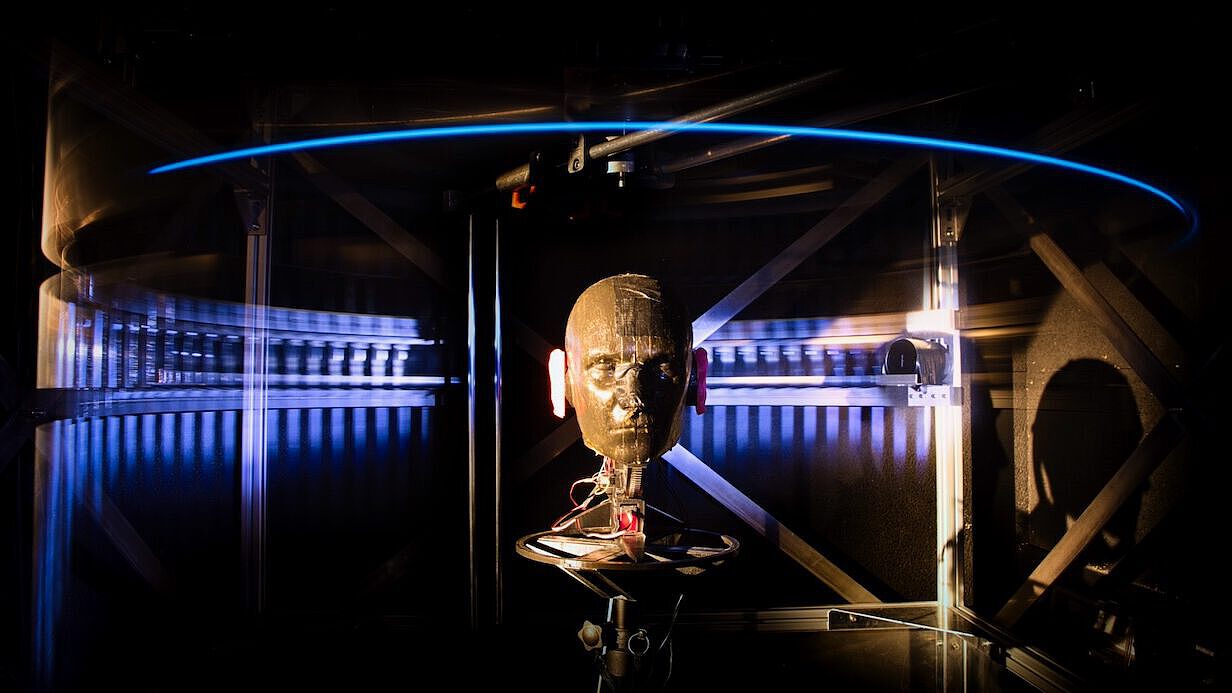

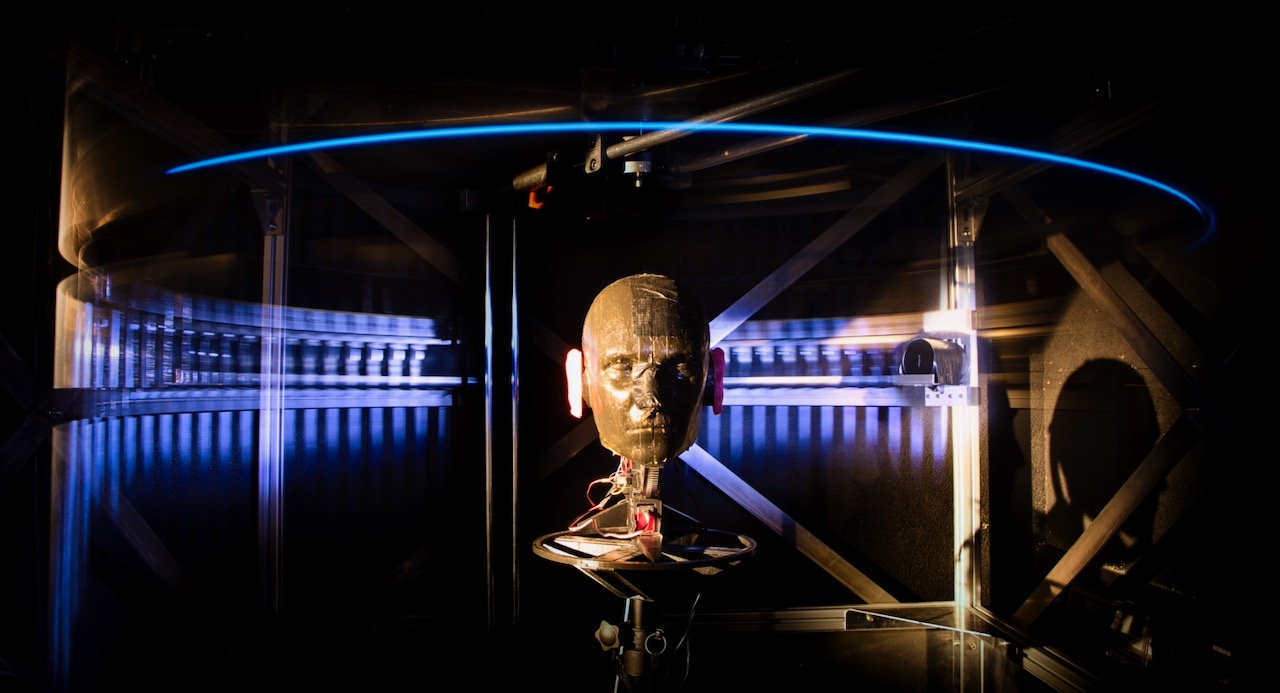

We use an artificial head that can be moved in pitch and yaw to track audible objects. The head has customizable ears to alter the head-related transfer function (HRTF). For more information click here.