Schriftenreihe des Instituts für Nachrichtentechnik

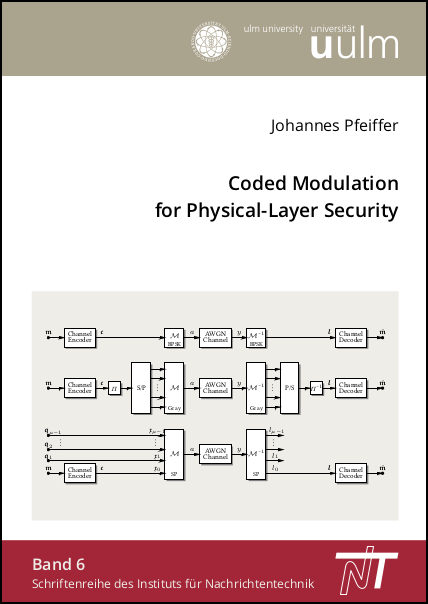

Dr.-Ing. Johannes Pfeiffer

When communicating secret messages in the presence of eavesdroppers, reliability and security have to be assured. In digital communications, the former is commonly subject to channel coding, whereas the latter is handled in higher layers of the protocol stack. There, classical cryptography is applied, which yields computational security based on secret keys, the difficulty of mathematical problems, e.g., prime factorization, and limited computational resources of potential eavesdroppers.

In contrast, physical-layer security (PLS) schemes are deployed at the lowest layer of the Open Systems Interconnection (OSI) model. Thereby, properties of the physical communication channel can be exploited to prevent successful eavesdropping without the application of secret keys. The design of coding schemes can incorporate constraints regarding both reliability for the legitimate receiver and security against eavesdroppers.

In this thesis, practical PLS coding and modulation schemes are examined and optimized for a Gaussian wiretap channel scenario. Their performance is assessed by the BER-based security gap metric. It quantifies the SNR advantage of a legitimate receiver has to have over potential eavesdroppers to achieve a secure and reliable transmission.

Secure coding schemes based on LDPC and polar codes are studied in combination with binary and higher-order signal constellations. For the latter, different coded modulation schemes yield a wide range of possible secure transmission rates and security metrics. Multi-level coding, where only the lowest bit level carries secret messages, exhibits the most favorable security performance. The influence of the signal constellation, especially its cardinality and dimensionality, on the secrecy metric is assessed. Moreover, the effects of signal shaping are examined.

Scrambling schemes, which are an essential part of most PLS coding schemes, are thoroughly assessed. Error propagation in the descrambling process, which has to be performed to recover the communicated secret messages, leads to favorable BER curves, which are characterized by sharp transitions from high-BER to low-BER operating points. Optimum schemes for shift-register-based scrambling and matrix-based scrambling are identified. The latter enables an equal protection of communicated message symbols, a feature most PLS coding schemes are missing.

The presented schemes are motivated by theoretical assessments of error propagation, capacity curves, and asymptotic performance of channel codes. Numerical simulations of practical code realizations cover the theoretical findings. Thereby, various possibilities for trade-offs between secure transmission rate and secrecy metric are laid out.

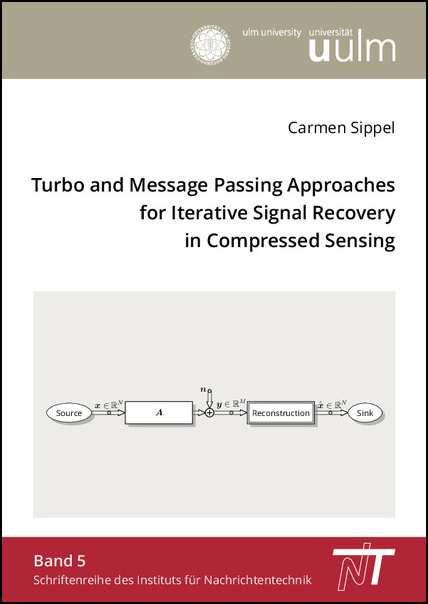

Dr.-Ing. Carmen Sippel

The thesis at hand deals with recovery algorithms for the reconstruction of signals from noisy and linearly compressed measurements. The procedure of linearly compressing a signal with known structure is known as compressed sensing (CS); the recovery is possible under certain conditions.

Meanwhile, there is a number of algorithms that are designed for this kind of task; each with own benefits and drawbacks. The thesis focuses on algorithms that can be derived from a suitable processing in a factor graph, which embodies the structural dependencies of the CS problem. This approach already leads to a variety of algorithms.

The most compartmentalized factorization leads to a form of the factor graph from which the so-called message passing (MP) algorithm can be derived. The opposite case, a factorization with fewest possible factors results in a graph with two factors, yielding Turbo-type algorithms that iterate between those two factors. In the thesis, both types of algorithms are derived from the respective factor graph by constrained optimization of the Kullback–Leibler divergence between the posterior and a suitably adapted substitute distribution, i.e., the respective algorithms are reconciled as instances of the same basic idea.

The thesis also comprises sequential algorithms, which are derived from factorizations of the problem that lie in between the two extreme cases explained above. The resulting algorithms can be seen as sequentialized versions of the Turbo-type algorithms. There are two possibilities. One way to sequentialize the algorithm is by iterating through the measurements; the other is to iterate through the signal components (additionally to iterating between the two main parts). The sequentialization allows for various schedules, which are examined in numerical simulations.

Furthermore, an estimation-theoretic view is cast on the processing between the factors. The result reveals possibilities for improvement of the algorithms. Several variants of the algorithms are proposed and compared in numerical simulations.

Dr. -Ing. Felix Frey

In communication theory, discrete-time end-to-end channel models play a fundamental role in developing advanced transmission and equalization schemes. Most notable, the discrete-time linear, dispersive channel with additive white Gaussian noise (AWGN) is often used to model point-to-point transmission scenarios. In the last decades, numerous transmission methods for such linear channels have emerged and are now applied in many digital transmission standards. With the advent of high-speed CMOS technology, those schemes have also been adopted in applications for fiber-optical transmission with digital-coherent reception.

Many of the applied techniques (e.g., coded modulation, signal shaping, and equalization) are still designed for linear channels whereas the fiber-optical channel is inherently nonlinear. A channel model which obtains the (discrete-time) output symbol sequence from a given (discrete-time) input symbol sequence by an explicit input/output relation is highly desirable to make further advances in developing strategies optimized for fiber-optical transmission.

In the past two decades, considerable effort was spent developing channel models for fiber-optical transmission with good trade-offs between computational complexity and numerical accuracy. Most of the early work is, however, concerned with the phenomenology in the optical domain alone, i.e., both the source and the effect of fiber nonlinearity is studied in the continuous-time, optical domain not considering the transmitter and/or receiver front-ends. Here, one promising strategy is, e.g., based on the so-called perturbative approach where non-linear effects are considered as small perturbations to the optical signal. By now, the optical community is faced with a vast number of models based on the perturbation premise, each model with its own assumptions, simplifications, and objectives. The connection between already existing models using complementary views (e.g., one in time-, the other in frequency-domain) is often unclear. A detailed and rigorous derivation for some prominent models is still pending. E.g., the transition from the original continuous-time to the more relevant discrete-time end-to-end model lacks a comprehensive, system-theoretic analysis.

Hence, in this dissertation, nonlinear fiber propagation is assessed from a systems-theoretic point of view with applications for communication systems. Based on the theory of nonlinear systems, the present work aims to connect the dots between various, existing channel models, unifying and comparing the different approaches. To that end, the perturbation approach in continuous-time is revisited with a special emphasis on the dual representations of nonlinear systems in time and frequency. From that, a discrete-time end-to-end fiber-optical channel model is derived which includes the transmit-side pulse shaping, the receive-side matched filtering, and T-spaced sampling. As before, two complementary representations of the now time-discretized end-to-end model are present—one in (discrete) time domain, the other in 1/T-periodic continuous-frequency domain. The time-domain formulation coincides with the well-known pulse-collision picture. The novel frequency-domain picture incorporates the sampling operation via an aliased and hence 1/T-periodic formulation of the nonlinear system. This gives rise to an alternative perspective on the end-to-end input/output relation between the spectrum of the discrete-time transmit symbol sequence and the spectrum of the receive symbol sequence. Both views can be extended from a regular, i.e., solely additive model, to a combined regular-logarithmic model to take the multiplicative nature of certain distortions into consideration. A novel algorithmic implementation of the discrete and periodic frequency-domain model is presented. The derived end-to-end model requires only a single computational step and shows good agreement in the mean-squared error sense compared to the oversampled and inherently sequential split-step Fourier method.

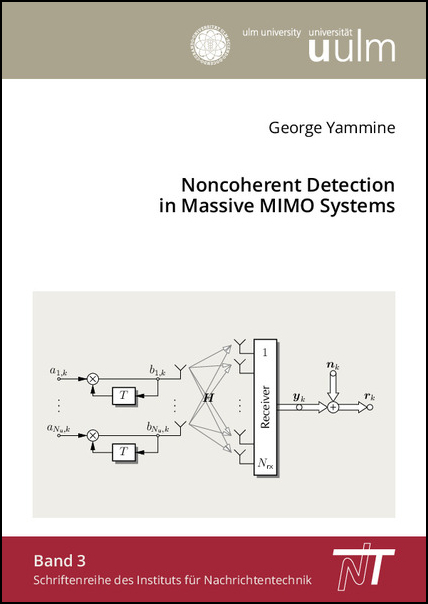

Dr. -Ing. George Yammine

Massive multiple-input/multiple-output communication systems are a great solution to satisfy the demand of high-throughput, reliable information transfer, without the need of increasing the required frequency spectrum. In such systems, the base stations are equipped with a very large number of antennas in comparison to the number of users served. Using linear techniques, such as maximum-ratio combining or zero-forcing linear equalization, the effective channels between the base station and the users become almost deterministic, an effect known as channel hardening, even though the actual channel realizations are random.

However, to reap these benefits, accurate estimates of the channel coefficients are required, a problem which is very challenging, due to the very large number of these coefficients. Additionally, when pilot-sequence-based techniques are applied, the estimates may suffer from additional interference due to the pilot contamination problem, that arises from the reuse of the limited number of these sequences in the multi-cellular environment.

To completely avoid these aforementioned drawbacks, noncoherent detection approaches in massive MIMO systems were proposed. Using differentially-encoded transmit data, and high-performing detection algorithms at the receiver, results that compete with the conventional coherent detection schemes that are typically employed in these systems are achieved. Moreover, only statistical knowledge of the situation, and not the actual conditions, are required. However, a problem still remains.

With the hundreds, if not more, radio front-ends, the hardware may become impractical to implement using the state-of-the-art components that are typically designed for single-antenna, or multi-antenna systems that employ only a handful of them. Additionally, to obtain the competitive results, processing is performed on large-dimensional data. This adds to the operational costs, as powerful signal-processing hardware becomes mandatory, when, e.g., latency is critical.

Hence, in this dissertation, the design of noncoherent receivers is explored. In the first part, the goal is achieving the best power efficiency possible. This includes improvements in the various steps of the receiver; starting with the antennas, and the radiation pattern they exhibit, the feedback gain in the detection algorithms, the metric utilized to decide which symbols are the most reliable, and decode them, and the combination of differential encoding with error-correction codes to reduce the error rates further. These advanced receiver concepts highlight the potential of noncoherent detection in massive MIMO systems.

In the second part of the dissertation, the main focus lies on reducing the algorithmic and hardware complexity of the noncoherent massive MIMO receiver. First, low-complexity alternatives or implementations are adopted for particular computationally-demanding processing tasks, e.g., the SVD for subspace tracking, at the receiver. Next, the structure of the different matrices involved in the detection process are exploited to reduce the numerical complexity from the start. Then, low-resolution analog-to-digital converters are investigated and optimized, to obtain as-good results as the unquantized case. Finally, the special case of one-bit quantization is studied, with the accompanying derivations to obtain a quantization-aware receiver. This entails a solution for acquiring the statistical knowledge needed for detection at the receiver. In contrast to coherent detection employing one-bit converters, it can be proven that at a very high signal-to-noise ratio, it is possible for noncoherent detection to have no error floor.

The theoretical insights in this work are supported by numerical results obtained from Monte--Carlo simulations. Additionally, when possible, analytic expressions of the required complexity are derived.

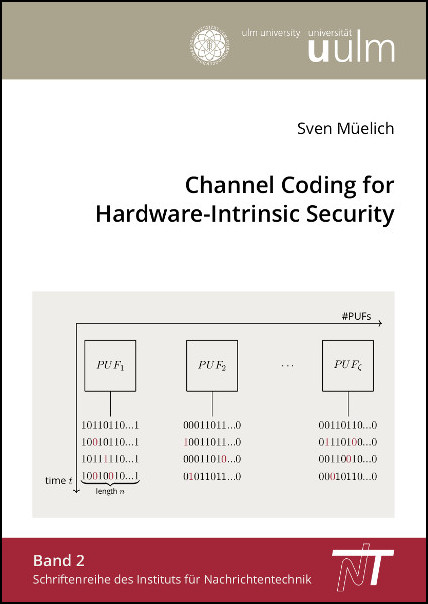

Dr. rer. nat. Sven Müelich

Hardware-intrinsic security studies cryptographic methods, whose implementations are assisted by some specific physical properties of the hardware on which they are executed. Physical Unclonable Functions (PUFs) are a predominant part of that field and currently an active research area. The most investigated type of PUF is the so-called silicon PUF, representing an electronic device, which is embedded in an integrated circuit (IC) with some cryptographic functions. PUFs are used to generate a highly secret, time-invariant, true random bit sequence, referred to as PUF response. This randomly generated PUF response is unique for each individual PUF device and can easily be reproduced on request inside the IC over its entire lifetime. The PUF response is derived from the inherent randomness of some physical properties occurring from variations in the IC manufacturing process. These variations cannot be controlled with todays technologies. For example, the propagation delay of logic gates or the initialization state of memory cells can be used in order to generate a PUF response. Since such behaviors cannot be controlled, it is extremely unlikely to produce two PUFs with the same response. This is the reason why PUFs are called unclonable. Even the IC manufacturer cannot predict the individual response of an embedded PUF without performing a readout after IC manufacturing. If the IC manufacturer prevents the possibility to readout a PUF response in any way, not even by using any kind of IC tampering, the PUF response becomes secret to everyone.

Since PUFs can be interpreted as highly secret, true random bit sources, they are predestined for a variety of cryptographic applications such as, for example, secret key generation and storage, identification and authentication of various entities. A PUF response exists in its binary form only for a very short active time period during execution of the cryptographic function in which it is involved. Otherwise, in predominantly inactive periods, it is hidden in its analog form, consisting of unclonable analog physical parameter values of the PUF device. Every attempt to manipulate these parameter values uncontrollably changes the binary PUF response. Consequently, the PUF response is inseparably merged with the IC hardware and it is not possible to reconstruct its binary value during inactive periods. In the very short active periods, when the PUF response exists in its binary form, its secret can be protected by additional methods. Due to external influences like changes of temperature, supply voltage or IC aging, many PUF variants cannot reproduce their binary responses error-free. For such error-prone PUFs, methods from the field of error-correcting codes have to be applied to reliably reproduce binary PUF responses.

In current applications, however, all PUF types are only equipped with classical errorcorrecting codes, which are not tailored to the specific properties of individual PUF types. Consequently, the possibilities of reliability improvements of error-prone PUFs are not completely exhausted.

This dissertation considers several aspects of PUFs from the perspective of coding theory. Traditionally, for error correction in PUFs, a worst-case bit error probability is used in order to model the binary symmetric channel. As existing results in the literature indicate, this is a very conservative and sometimes even pessimistic assumption. In the theory of error-correcting codes, knowing characteristics of a channel is always beneficial in order to design codes that lead to an improvement of the error-correction performance. We derive channel models for two different PUF variants, namely Ring Oscillator PUFs (ROPUFs) and Dynamic Random Access Memory (DRAM) PUFs. Using DRAM to construct PUFs is a comparatively new approach proposed in the literature. In contrast to the established variants, PUF responses extracted from DRAM are heavily biased towards either “0” or “1”, and hence, debiasing methods have to be applied in addition to error correction. We propose methods that can be applied to solve the debiasing problem.

When dealing with noisy responses, secure sketches are a widely used concept. When reproducing a PUF response based on an erroneous re-extracted response, so-called helper data which are calculated and stored during initialization have to be used to map responses to codewords, such that decoding algorithms can be applied. We propose and analyze a new secure sketch that only uses an error-correcting code, but no further helper data. Also, we use our channel model, which we derived for ROPUFs, to construct new secure sketches.

Furthermore, we propose specific code constructions that can be used for error correction in the context of PUFs. Block codes and convolutional codes are considered for that purpose and we explain how to improve existing results from literature by using code classes (Reed–Muller codes, Reed–Solomon codes), decoding techniques (generalized minimum-distance decoding, power decoding, list decoding, using soft information at the input of the decoder, sequential decoding) or coding techniques (generalized concatenated codes), that have not been applied to PUFs before. Our code constructions result in a smaller block error probability, decoding complexity or codeword length in comparison to existing implementations.

The final part of this dissertation deals with security aspects. In particular, we consider timing attacks on the decoding algorithm, as a representative of the huge family of side-channel attacks. We study two techniques to prevent such attacks, namely a masking technique, as well as a modified decoding algorithm with a runtime that is constant and independent of the received word.

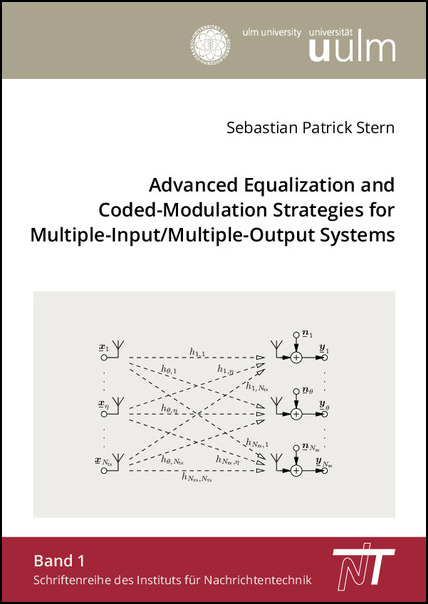

Advanced equalization and coded-modulation strategies for multiple-input/multiple-output (MIMO) communication are considered. The focus is on techniques that are suited for the application in multiuser MIMO uplink transmission (MIMO multiple-access channel) or multiuser MIMO downlink transmission (MIMO broadcast channel). This particularly includes lattice-reduction-aided (LRA) schemes which have become popular in recent years.

In LRA schemes, the MIMO channel matrix is factorized into two parts: a unimodular integer matrix and a residual non-integer matrix. Given that factorization, only the non-integer part is conventionally equalized, either by means of linear equalization or the application of the principle of successive interference cancellation (SIC). In contrast to that, the integer interference can be resolved without any performance-harming noise enhancement. From a mathematical point of view, the integer matrix describes a change to a more suited basis for channel equalization. Consequently, the channel factorization can be obtained by well-known lattice-basis-reduction algorithms, e.g., the Lenstra–Lenstra–Lovász (LLL) algorithm. However, concentrating on the treatment of the multiuser MIMO interference, LRA schemes have most often been treated uncoded, i.e., neglecting the combination with a convenient coded-modulation approach. This situation has changed with the concept of integer-forcing (IF) equalization. In IF schemes, the channel matrix is factorized, too. Nevertheless, the integer interference is resolved over the finite field of the channel code—creating a close coupling between channel equalization and coded modulation. For the finite-field integer matrix, the unimodularity constraint as present in LRA schemes can be relaxed to a full-rank constraint. This not only brings up the question if, in classical LRA schemes, the unimodularity constraint is really necessary, but also if the LRA techniques have really been operated in an optimum or at least in a close-to-optimum way.

Hence, in this thesis, strategies and approaches are identified that enable a performance gain over the state-of-the-art application of LRA receiver- or transmitter-side equalization. First, this involves the choice of the signal constellation. In particular, constellations over the Eisenstein integers—the hexagonal lattice over the complex plane—are studied. These signal constellations as well as conventional quadrature amplitude modulation (QAM) ones are combined with coded-modulation schemes that are suited for the application in multiuser MIMO communications using binary or non-binary low-density parity-check (LDPC) codes. Moreover, criteria and algorithms for lattice basis reduction are reviewed and extended for lattices over Eisenstein integers. These considerations also include the abovementioned relaxation to full-rank integer matrices, which is specifically known as successive minima problem. A recapitulation of conventional linear and SIC-based equalization schemes is provided, where the famous V-BLAST detection strategy is regarded from the perspective of lattice theory. Following this, optimum or close-to-optimum channel factorization strategies and related algorithms are worked out for LRA transmitter- and receiver-side schemes. It is shown that the classical unimodularity constraint can indeed be relaxed—generalizing the “lattice-reduction-aided” to “lattice-aided” (LA) schemes. The combination of these LA approaches with coded-modulation strategies is studied and the differences to the corresponding IF schemes are clarified; a discussion on the convenience of both philosophies in multiuser MIMO uplink and downlink transmission is given. The theoretical derivations in this thesis are supported by results obtained from Monte-Carlo simulations. This particularly includes the evaluation of the transmission performance if binary source symbols are transmitted.