Object-Based Video Compression for Real-World Data

This project explores whether the coding efficiency for real-world video material can be improved by exploiting depth information.

All modern hybrid video codecs remove spatial and temporal redundancy to compress video sequences. To reduce the data necessary to describe a given area of an image, the codec exploits its similarity to a reference image. This process, called prediction, is one of the central elements of every hybrid video codec. The gains from further enhancing traditional hybrid 2D video compression can be expected to level off in the near future, so new concepts need to be explored.
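To make the idea of prediction concrete, the following is a minimal sketch of block-based temporal prediction using an exhaustive search and the sum of absolute differences (SAD). It is an illustration under stated assumptions, not the method of any particular codec: the `Frame` and `MotionVector` types, the function names, and the search strategy are all hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>
#include <limits>
#include <vector>

// Hypothetical grayscale frame: row-major 8-bit samples.
struct Frame {
    int width;
    int height;
    std::vector<std::uint8_t> pixels;
    std::uint8_t at(int x, int y) const { return pixels[y * width + x]; }
};

// Displacement into the reference frame and the matching cost it achieves.
struct MotionVector {
    int dx;
    int dy;
    long sad;
};

// Sum of absolute differences between the block at (bx, by) in `cur`
// and the block displaced by (dx, dy) in `ref`.
long blockSad(const Frame& cur, const Frame& ref,
              int bx, int by, int size, int dx, int dy) {
    long sad = 0;
    for (int y = 0; y < size; ++y) {
        for (int x = 0; x < size; ++x) {
            int a = cur.at(bx + x, by + y);
            int b = ref.at(bx + x + dx, by + y + dy);
            sad += std::abs(a - b);
        }
    }
    return sad;
}

// Exhaustive search within +/- range samples. Candidates that would
// leave the reference frame are skipped.
MotionVector findBestMatch(const Frame& cur, const Frame& ref,
                           int bx, int by, int size, int range) {
    assert(cur.width == ref.width && cur.height == ref.height);
    MotionVector best{0, 0, std::numeric_limits<long>::max()};
    for (int dy = -range; dy <= range; ++dy) {
        for (int dx = -range; dx <= range; ++dx) {
            int rx = bx + dx;
            int ry = by + dy;
            if (rx < 0 || ry < 0 ||
                rx + size > ref.width || ry + size > ref.height) {
                continue;
            }
            long sad = blockSad(cur, ref, bx, by, size, dx, dy);
            if (sad < best.sad) {
                best = {dx, dy, sad};
            }
        }
    }
    return best;
}
```

An encoder built on this idea would transmit the displacement and the remaining difference instead of the raw block. A purely translational search of this kind has no direct way to express rotation or zoom.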

This project focuses on a different method of data representation that is based on 3D models. The novel approach is intended to address cases that traditional hybrid video encoders handle poorly, such as camera rotation, zooming operations, and the reappearance of scene parts that are already known but were temporarily occluded.

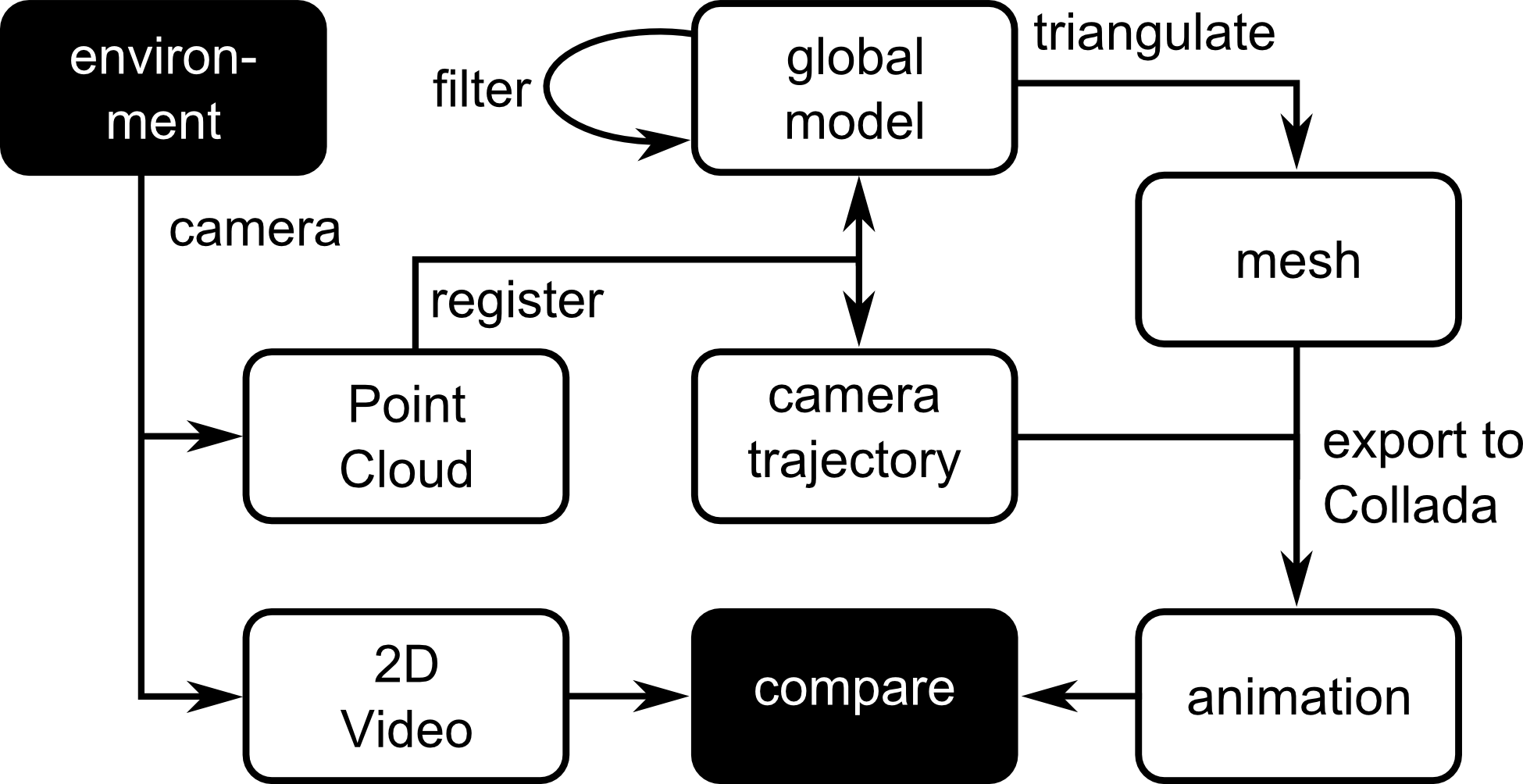

The goal of this work is to develop a framework that transfers real-world data into a 3D model-based representation and then compresses this model. Figure 1 shows an overview of the steps performed during model-based encoding. These steps need to be accompanied by quality comparisons against conventional 2D encoding in order to determine the validity of the approach.

The well-known Microsoft Kinect camera delivers both a 2D video and a depth-based 3D representation of the environment. The open-source Point Cloud Library (PCL, C++) is used to acquire and process the 3D data. After conversion into a mesh-based 3D model, the data can be passed to the MPEG-4 Part 25 encoder. After decoding the 3D data, the content can be rendered and its visual quality compared with the 2D data that was acquired alongside it.
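The first step of such a pipeline, turning a depth image into 3D points, can be sketched with a standard pinhole camera model. The sketch below deliberately uses only the C++ standard library rather than PCL, and everything in it is an assumption made for illustration: the `Point3` and `Intrinsics` types, the `backProject` function, depth stored in millimeters with 0 marking an invalid sample, and whatever intrinsic parameters the caller supplies.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical 3D point in camera coordinates, in meters.
struct Point3 {
    float x;
    float y;
    float z;
};

// Pinhole intrinsics: focal lengths and principal point, in pixels.
// Real values come from camera calibration.
struct Intrinsics {
    float fx;
    float fy;
    float cx;
    float cy;
};

// Back-project a row-major depth image into an unorganized point cloud.
// Assumption: depth is given in millimeters and 0 means "no measurement".
std::vector<Point3> backProject(const std::vector<std::uint16_t>& depthMm,
                                int width, int height,
                                const Intrinsics& k) {
    assert(depthMm.size() == static_cast<std::size_t>(width) * height);
    std::vector<Point3> cloud;
    cloud.reserve(depthMm.size());
    for (int v = 0; v < height; ++v) {
        for (int u = 0; u < width; ++u) {
            std::uint16_t d = depthMm[v * width + u];
            if (d == 0) {
                continue;  // skip invalid samples
            }
            float z = d * 0.001f;  // millimeters to meters
            cloud.push_back({(u - k.cx) * z / k.fx,
                             (v - k.cy) * z / k.fy,
                             z});
        }
    }
    return cloud;
}
```

The resulting points would still need filtering and surface reconstruction before they form a mesh that a 3D encoder can consume.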

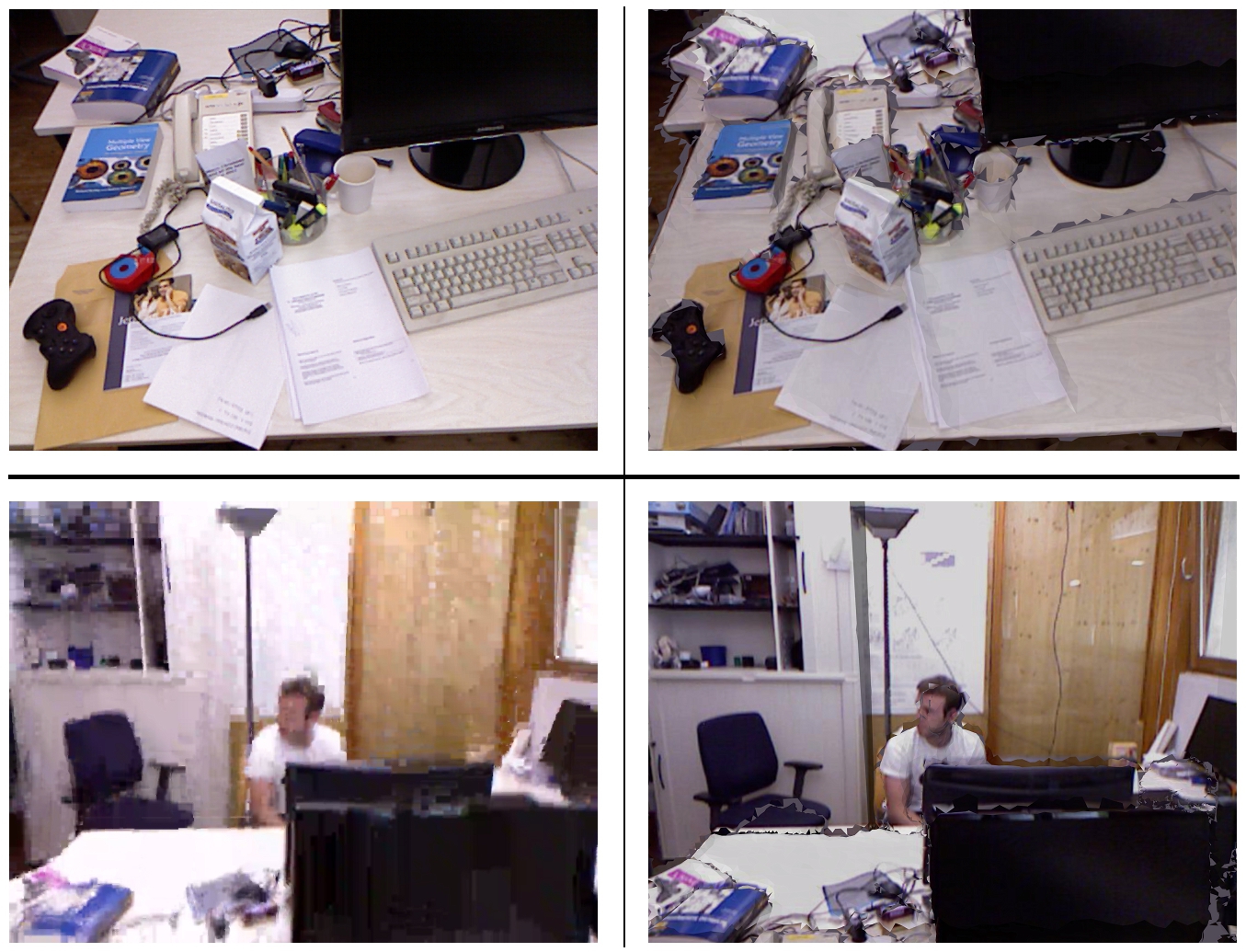

Figure 2 shows sample pictures of encoded video streams for hybrid and model-based video codecs. The two images in the top row show a high-quality scenario at comparable data rates. The bottom row shows frames encoded by the same codecs at lower but comparable data rates.
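A comparison like this needs an objective quality measure alongside visual inspection. The project description does not state which metric is used; the sketch below assumes peak signal-to-noise ratio (PSNR) on 8-bit samples, a common choice for comparing a decoded or rendered frame against a reference. The function name `psnr` and the flat sample layout are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// PSNR in dB between two equally sized 8-bit sample buffers.
// Returns +infinity when the buffers are identical.
double psnr(const std::vector<std::uint8_t>& reference,
            const std::vector<std::uint8_t>& decoded) {
    assert(reference.size() == decoded.size() && !reference.empty());
    double sumSquared = 0.0;
    for (std::size_t i = 0; i < reference.size(); ++i) {
        double diff = static_cast<double>(reference[i]) -
                      static_cast<double>(decoded[i]);
        sumSquared += diff * diff;
    }
    double mse = sumSquared / static_cast<double>(reference.size());
    if (mse == 0.0) {
        return std::numeric_limits<double>::infinity();
    }
    // The peak value is 255 for 8-bit samples.
    return 10.0 * std::log10(255.0 * 255.0 / mse);
}
```

For a fair comparison, both codecs would be measured against the same captured 2D frames at matched data rates. A rendered 3D model may also differ geometrically from the camera image in ways that PSNR penalizes heavily, so a perceptual metric could be a useful complement.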

Central questions to be answered:

- how accurate can the 3D representation be?

- how well does this approach perform in terms of encoding efficiency?

- is it in any respect an improvement over hybrid video encoding?

- which problems occur when switching from a static offline scenario (whole scene is known a priori) to a dynamic online scenario (3D model is updated with every new frame)?

Project leader

Project members

Dipl.-Ing. C. Feller