AREA - Augmented Reality Engine

Project Description

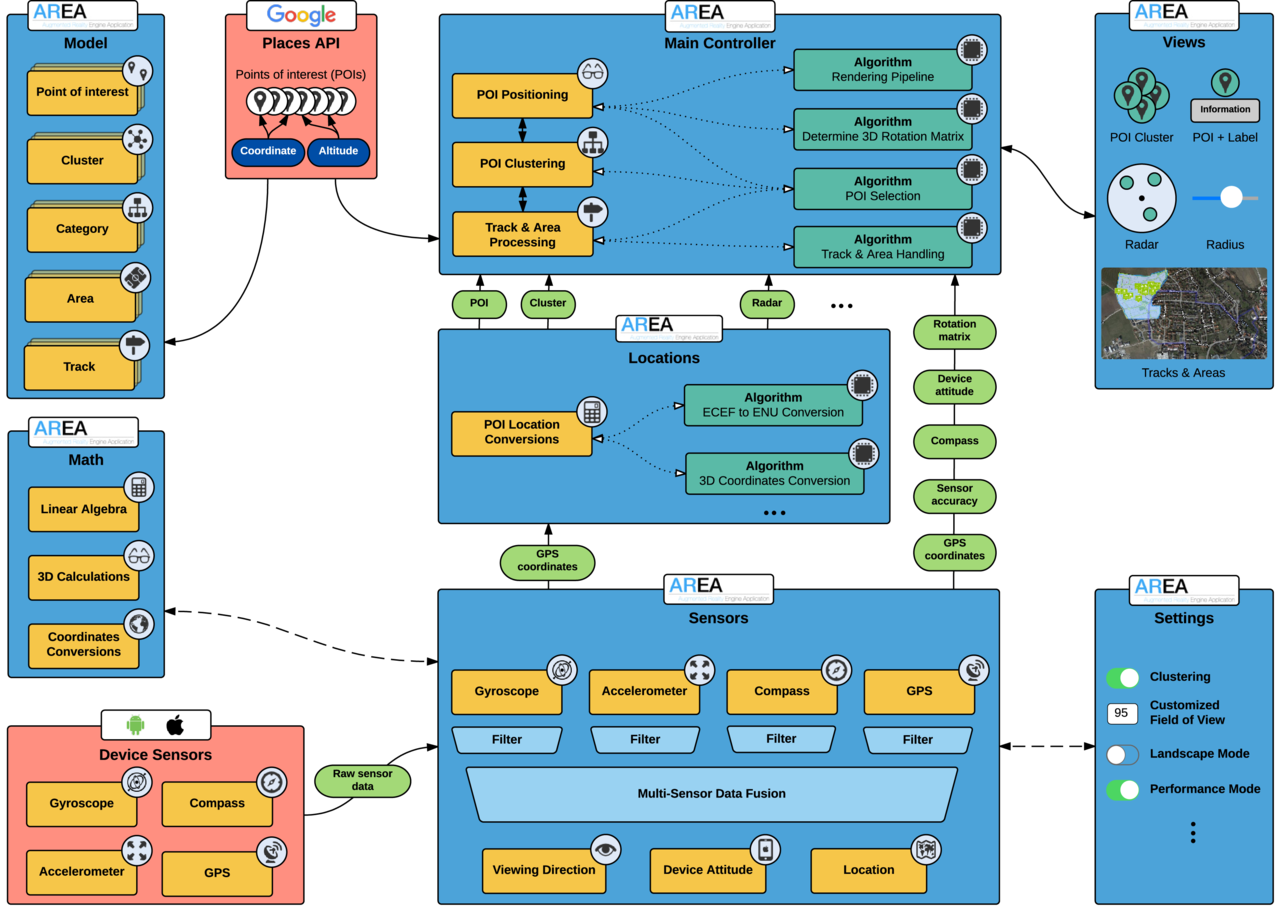

AREA is a fully functional and customizable engine that enables augmented reality on smart mobile devices and is available for Apple iOS and Android. Points of Interest (POIs) in the user's surroundings are displayed in the camera view at the position corresponding to their GPS location. They also respond to touch events, so further information can be shown. The necessary calculations, the reading of sensor data, and the drawing of points on the screen are implemented efficiently. As a result, AREA uses as little battery and processor power as possible while still using enough to render the screen without lag. Thanks to its modular architecture, third-party applications can be integrated and new applications can be built on top of AREA. The strict separation of internal components makes AREA highly adaptable and maintainable.
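The placement of a POI in the camera view can be illustrated with a minimal sketch. It computes the initial great-circle bearing from the user to a POI. It then converts the angle between that bearing and the camera heading into a horizontal pixel coordinate. The sketch assumes three things: the device's compass heading is known, the camera's horizontal field of view is known, and angles are mapped linearly onto the screen width. The function names `bearing_deg` and `screen_x` are hypothetical and are not part of AREA's API.

```python
import math

def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 (user) to point 2 (POI),
    in degrees clockwise from north, normalised to [0, 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return math.degrees(math.atan2(y, x)) % 360.0

def screen_x(poi_bearing, heading, fov_deg, width_px):
    """Hypothetical helper: horizontal pixel position of a POI,
    or None if it lies outside the camera's horizontal field of view."""
    # Signed angle between the camera heading and the POI bearing, wrapped into [-180, 180).
    delta = (poi_bearing - heading + 540.0) % 360.0 - 180.0
    if abs(delta) > fov_deg / 2:
        return None
    # Assumed linear mapping: -fov/2 -> left edge (0), +fov/2 -> right edge (width_px).
    return (delta / fov_deg + 0.5) * width_px
```

The linear mapping is a simplification. A pinhole camera model would use the tangent of the angle instead, which spreads POIs slightly further apart near the screen edges.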

AREA's augmented reality view contains a radar that displays all POIs within a specified radius around the user and shows the user's current field of view. The radar also indicates the orientation relative to north. The POIs themselves are drawn on top of a real-time camera preview and are positioned according to both their GPS location and their altitude. Because AREA is modular, the appearance of the user interface elements in both the camera view and the map view can be easily adjusted.
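The radar and altitude placement reduce to a few geometric steps. The sketch below filters a POI by its distance from the user and places it on a north-up radar. It also derives the field-of-view wedge and converts the POI's elevation angle into a vertical screen position. It assumes a mean Earth radius of 6,371 km and a camera held upright with a horizontal optical axis, so device pitch is ignored. The names `haversine_m`, `radar_position`, `fov_wedge`, and `screen_y` are hypothetical and only illustrate the geometry, not AREA's implementation.

```python
import math

EARTH_RADIUS_M = 6371000.0  # assumed mean Earth radius

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance between two GPS coordinates, in metres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlon = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.atan2(math.sqrt(a), math.sqrt(1 - a))

def radar_position(distance_m, bearing_deg, radius_m, radar_radius_px):
    """Hypothetical helper: (x, y) offset of a POI from the radar centre on a
    north-up radar, or None if the POI lies outside the radar radius."""
    if distance_m > radius_m:
        return None
    r = radar_radius_px * distance_m / radius_m  # scale metres to radar pixels
    b = math.radians(bearing_deg)
    # North points up; screen y grows downwards, hence the minus sign.
    return (r * math.sin(b), -r * math.cos(b))

def fov_wedge(heading_deg, fov_deg):
    """Hypothetical helper: start and end bearings of the field-of-view wedge on the radar."""
    return ((heading_deg - fov_deg / 2) % 360.0, (heading_deg + fov_deg / 2) % 360.0)

def screen_y(distance_m, user_alt_m, poi_alt_m, vfov_deg, height_px):
    """Hypothetical helper: vertical pixel position from the POI's elevation angle,
    assuming the camera's optical axis is horizontal (pitch ignored)."""
    elevation = math.degrees(math.atan2(poi_alt_m - user_alt_m, distance_m))
    # Elevation 0 maps to the screen centre; +vfov/2 maps to the top edge.
    return height_px / 2 - (elevation / vfov_deg) * height_px
```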

AREA V2

In recent years, hardware vendors have continuously improved the computational capabilities of smart mobile devices, creating new opportunities for mobile application engineers. Mobile augmented reality is one scenario demonstrating that smart mobile applications are becoming increasingly mature. In the AREA (Augmented Reality Engine Application) project, we developed a kernel that enables such location-based mobile augmented reality applications. On top of this kernel, mobile application developers can easily build their individual applications.

The kernel, in turn, focuses on robustness and high performance. In addition, it provides a flexible architecture that fosters the development of individual location-based mobile augmented reality applications. In the first stage of the project, the LocationView concept was developed as the core for realizing the kernel algorithms. This concept has proven its usefulness in various applications running on iOS, Android, and Windows Phone. Driven by the further evolution of computational capabilities on the one hand and the emerging demands of location-based mobile applications on the other, we developed a new kernel concept.

In particular, the new kernel can handle clusters of points of interest (POIs) and enables the use of tracks. These changes required new concepts, which are presented in this paper. To demonstrate the applicability of our kernel, we apply it in the context of various mobile applications. As a result, engineers using our approach can effectively build mobile augmented reality applications that run on current mobile operating systems. We regard such applications as a good example of using mobile computational capabilities efficiently to better support mobile users in everyday life.
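The text does not describe how AREA's kernel forms POI clusters. One possible approach groups POIs whose horizontal screen positions lie closer together than a chosen pixel gap, so that overlapping markers could be drawn as a single cluster. The sketch below illustrates this approach only. `ScreenPoi`, `cluster_by_screen_x`, and the `min_gap_px` threshold are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ScreenPoi:
    """Hypothetical POI already projected onto the screen."""
    name: str
    x: float  # horizontal screen position in pixels

def cluster_by_screen_x(pois, min_gap_px):
    """Hypothetical greedy clustering: sort POIs by x and start a new cluster
    whenever the gap to the previous POI is at least min_gap_px."""
    clusters = []
    for poi in sorted(pois, key=lambda p: p.x):
        # Compare with the right-most member of the current cluster (single linkage).
        if clusters and poi.x - clusters[-1][-1].x < min_gap_px:
            clusters[-1].append(poi)
        else:
            clusters.append([poi])
    return clusters
```

Because each POI is compared only with its nearest left neighbour, a chain of closely spaced markers ends up in one cluster even if its ends are far apart. A real implementation might also consider distance or vertical overlap.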

Project Details

| Ulm University, Institute of Databases and Information Systems |

| Philip Geiger |
