Student Projects - Human-Computer Interaction, Prof. Dr Rukzio

2022

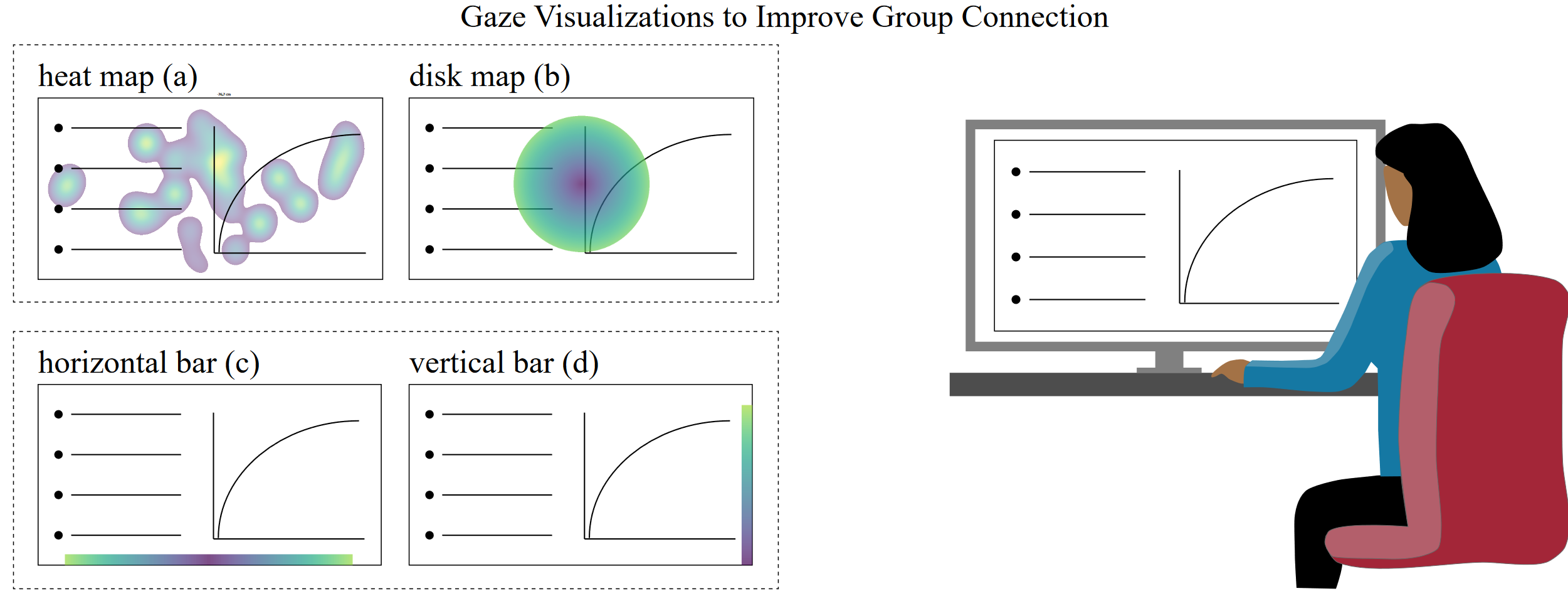

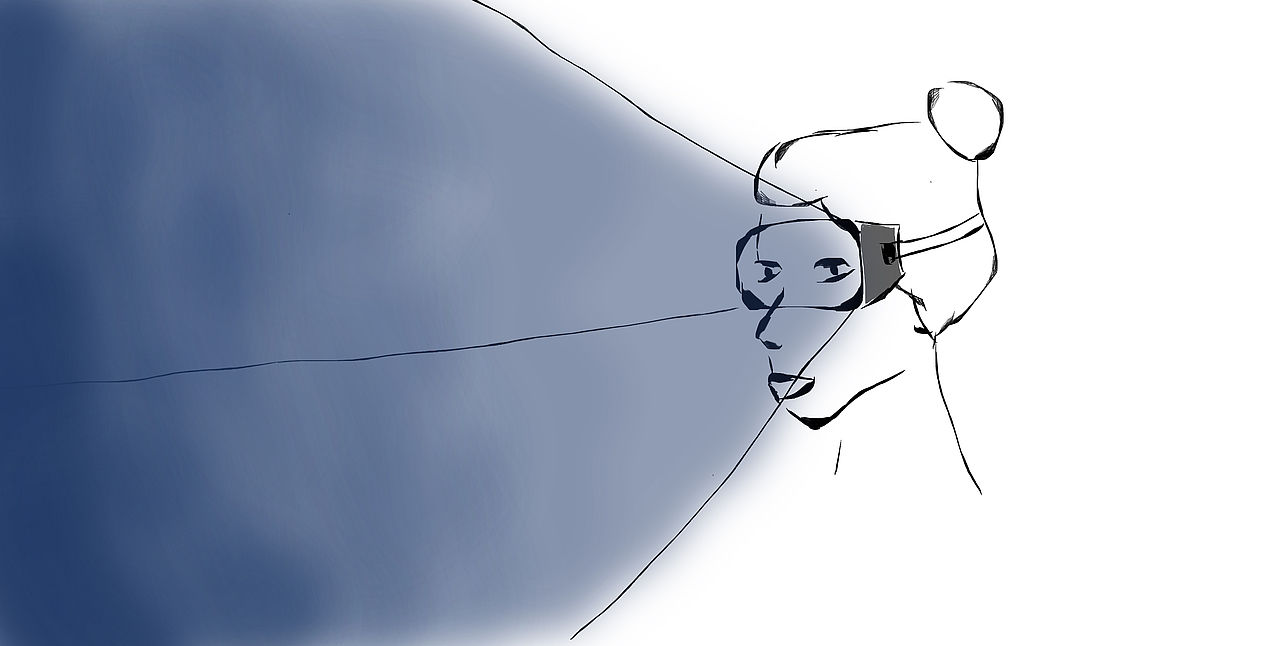

Attention of Many Observers Visualized by Eye Movements

Interacting with a group of people requires directing the attention of the whole group and therefore requires feedback about the crowd's attention. In face-to-face interactions, head and eye movements serve as indicators of crowd attention. However, when interacting online, such indicators are not available. To substitute for this information, we adapted gaze visualizations to a crowd scenario. We developed, implemented, and evaluated four types of visualizations of crowd attention in an online study with 72 participants, using lecture videos enriched with the audience's gaze. All participants reported increased connectedness to the audience, especially for visualizations depicting the whole distribution of gaze, including spatial information. Visualizations that avoid spatial overlay by depicting only the variability were regarded as less helpful, for real-time as well as for retrospective analyses of lectures. Improved visualizations of crowd attention have the potential for a broad variety of applications in all kinds of social interaction and communication in groups.

2021

Autonomous vehicles provide new input modalities to improve interaction with in-vehicle information systems. However, due to road and driving conditions, user input can be perturbed, resulting in reduced interaction quality. One challenge is assessing the effects of vehicle motion on the interaction without an expensive high-fidelity simulator or a real vehicle. This work presents SwiVR-Car-Seat, a low-cost swivel seat that simulates vehicle motion using rotation. In an exploratory user study (N=18), participants sat in a virtual autonomous vehicle and performed interaction tasks using touch, gesture, gaze, or speech input. Results show that the simulation increased the perceived realism of vehicle motion in virtual reality and the feeling of presence. Task performance was not influenced uniformly across modalities: gesture and gaze were negatively affected, while there was little impact on touch and speech. The findings can inform automotive user interface design to mitigate the adverse effects of vehicle motion on the interaction.

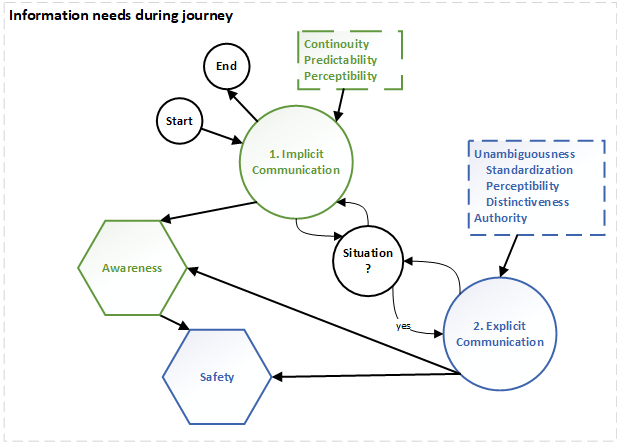

Passengers of automated vehicles will likely engage in non-driving-related activities such as reading and will therefore be disengaged from the driving task. However, especially in critical situations such as unexpected pedestrian crossings, passengers can be assumed to request information about the vehicle's intention and an explanation.

Several concepts have been proposed for such communication from the automated vehicle to the passenger. However, their results are not comparable due to varying information content and scenarios.

We present a comparative study in Virtual Reality (N=20) of four visualization concepts, using Augmented Reality, a Head-Up Display, or Lightbands, and a baseline without visualization.

We found that all concepts were rated reasonable and necessary and increased trust, perceived safety, perceived intelligence, and acceptance compared to no visualization. However, when visualizations were compared, there were hardly any significant differences between them.

Autonomous vehicles could improve mobility, safety, and inclusion in traffic.

While this technology seems within reach, its successful introduction depends on acceptance by its intended users. A substantial factor for this acceptance is trust in the autonomous vehicle's capabilities. Visualizing internal information processed by an autonomous vehicle could calibrate this trust by enabling the perception of the vehicle's detection capabilities (and its failures) while inducing only a low cognitive load. Additionally, the simultaneously raised situation awareness could benefit potential take-overs.

We report the results of two comparative online studies on visualizing semantic segmentation information for the human user of autonomous vehicles. Effects on trust, cognitive load, and situation awareness were measured using a simulation (N=32) and state-of-the-art panoptic segmentation on a pre-recorded real-world video (N=41).

Results show that the visualization using Augmented Reality increases situation awareness while keeping cognitive load low.

2020

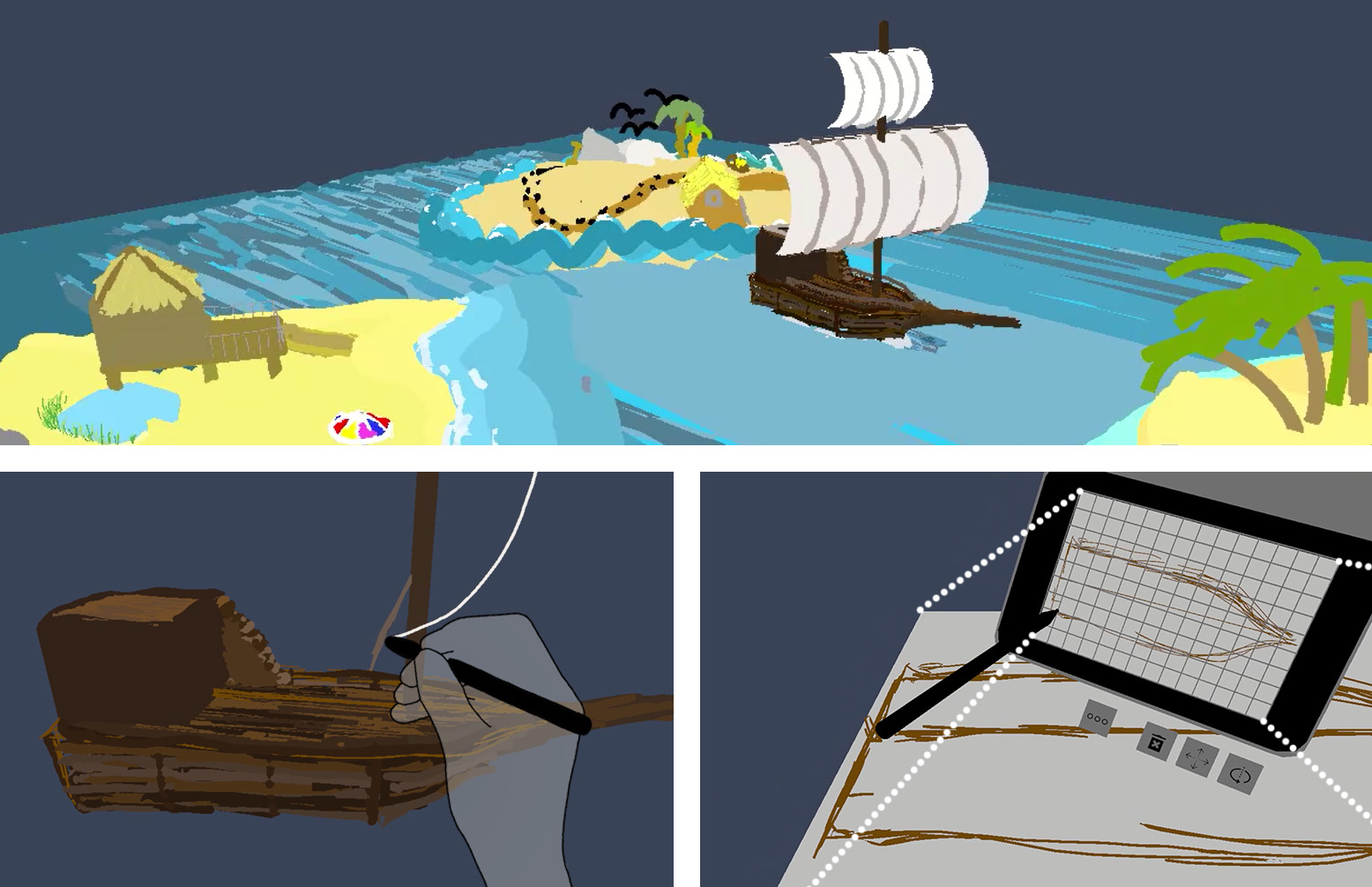

VRSketchIn: Exploring the Design Space of Pen and Tablet Interaction for 3D Sketching in Virtual Reality

Sketching in virtual reality (VR) enhances perception and understanding of 3D volumes, but is currently a challenging task, as spatial input devices (e.g., tracked controllers) do not provide any scaffolding or constraints for mid-air interaction. We present VRSketchIn, a VR sketching application using a 6DoF-tracked pen and a 6DoF-tracked tablet as input devices, combining unconstrained 3D mid-air with constrained 2D surface-based sketching. To explore what possibilities arise from this combination of 2D (pen on tablet) and 3D input (6DoF pen), we present a set of design dimensions and define the design space for 2D and 3D sketching interaction metaphors in VR. ...

Mix&Match: Towards Omitting Modelling through In-Situ Alteration and Remixing of Model Repository Artifacts in Mixed Reality

The accessibility of tools to model artifacts is one of the core driving factors for the adoption of Personal Fabrication. Consequently, model repositories like Thingiverse have become important tools in (novice) makers' processes. They allow makers to shorten or even omit the design process, offloading a majority of the effort to other parties. However, steps such as measuring surrounding constraints (e.g., clearance), which exist only inside the user's environment, cannot be outsourced in the same way. We propose Mix&Match, a mixed-reality-based system that allows users to browse model repositories, preview the models in-situ, and adapt them to their environment...

Towards Inclusive External Communication of Autonomous Vehicles for Pedestrians with Vision Impairments

People with vision impairments (VIP) are among the most vulnerable road users in traffic. Autonomous vehicles are believed to reduce accidents but still demand some form of external communication signaling relevant information to pedestrians. Recent research on the design of vehicle-pedestrian communication (VPC) focuses strongly on concepts for a non-disabled population. Our work presents an inclusive user-centered design for VPC, beneficial for both vision impaired and seeing pedestrians. We conducted a workshop with VIP (N=6), discussing current issues in road traffic and comparing communication concepts proposed by literature...

JumpVR: Jump-Based Locomotion Augmentation for Virtual Reality

One of the great benefits of virtual reality (VR) is the implementation of features that go beyond realism. Common "unrealistic" locomotion techniques (like teleportation) can overcome the spatial limitations of tracking, but reduce the potential benefits of more realistic techniques (e.g., walking). As an alternative that combines realistic physical movement with a hyper-realistic virtual outcome, we present JumpVR, a jump-based locomotion augmentation technique that virtually scales users' physical jumps...

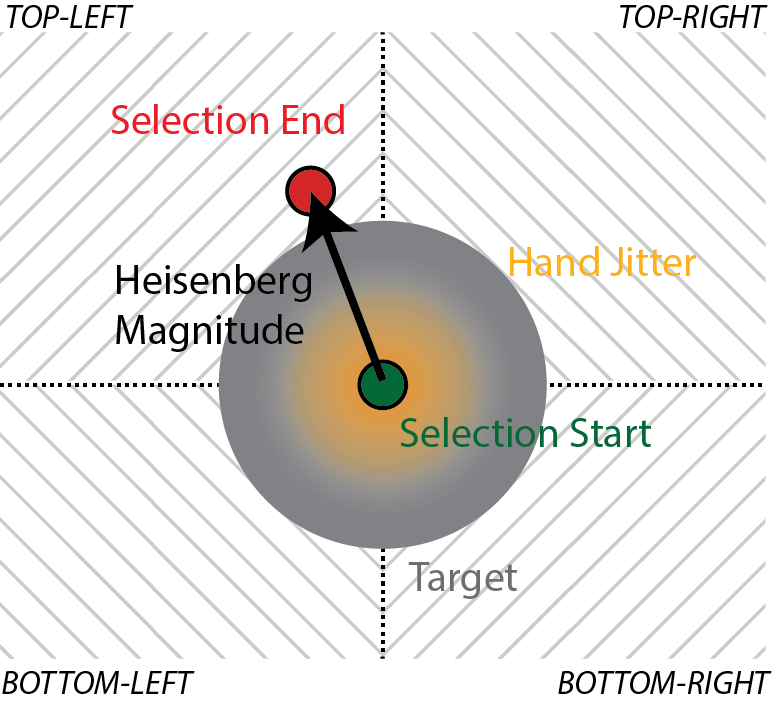

Understanding the Heisenberg Effect of Spatial Interaction: A Selection Induced Error for Spatially Tracked Input Devices

Virtual and augmented reality head-mounted displays (HMDs) currently rely heavily on spatially tracked input devices (STIDs) for interaction. All of these STIDs are prone to the phenomenon that a discrete input (e.g., a button press) disturbs the position of the tracker, resulting in a different selection point during ray-cast interaction (the Heisenberg Effect of Spatial Interaction). Although the effect is known to exist, there is currently little understanding of its severity, its structure, and its impact on throughput and angular error during a selection task. In this work...
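
To illustrate why a small disturbance matters in ray-cast selection, the geometry can be sketched as follows. This is a minimal sketch, not the method used in this work: it assumes the button press rotates the ray by a small angle θ and that the target lies on a plane perpendicular to the original ray at distance d, so the hit point shifts by d·tan(θ). The function names and the 1-degree disturbance are hypothetical, illustrative values rather than measured results.

```python
import math


def hit_offset(distance_m: float, angular_error_deg: float) -> float:
    """Lateral shift of the ray-cast hit point on a plane perpendicular to the
    original ray at distance_m, after the ray rotates by angular_error_deg."""
    return distance_m * math.tan(math.radians(angular_error_deg))


def still_selects(distance_m: float, target_width_m: float, angular_error_deg: float) -> bool:
    """True if the shifted hit point still lies inside a target of the given
    width that is centred on the original (undisturbed) ray."""
    return abs(hit_offset(distance_m, angular_error_deg)) <= target_width_m / 2


if __name__ == "__main__":
    # Hypothetical 1-degree disturbance caused by a button press (not a measured value).
    for d in (1.0, 3.0, 10.0):
        print(f"{d:4.1f} m -> offset {hit_offset(d, 1.0) * 100:.1f} cm, "
              f"10 cm target still hit: {still_selects(d, 0.10, 1.0)}")
```

Because the angular error is constant while the linear offset grows with distance, the same disturbance that is harmless for a nearby target can cause a miss for a distant one of the same size.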

2019

cARe: An Augmented Reality Support System for Geriatric Inpatients with Mild Cognitive Impairment

Cognitive impairments such as memory loss, impaired executive function, and decreasing motivation can gradually undermine instrumental activities of daily living (IADL). With a growing older population, previous works have explored assistive technologies (ATs) to automate repetitive components of therapy and thereby increase patients' autonomy and reduce their dependence on carers.

Face/On: Multi-Modal Haptic Feedback for Head-Mounted Displays in Virtual Reality

While the real world provides humans with a huge variety of sensory stimuli, virtual worlds mainly communicate their properties through visual and auditory feedback due to the design of current head-mounted displays (HMDs). Since HMDs offer sufficient contact area to integrate additional actuators, prior works used a limited number of haptic actuators to convey information about the virtual world. With the Face/On prototype...

A Design Space for Gaze Interaction on Head-Mounted Displays

Augmented and virtual reality (AR/VR) head-mounted display (HMD) applications inherently rely on three-dimensional information. In contrast to gaze interaction on a two-dimensional screen, gaze interaction in AR and VR therefore also requires estimating a user's gaze in 3D (3D Gaze).

While first applications, such as foveated rendering, hint at the compelling potential of combining HMDs and gaze, a systematic analysis is missing. To fill this gap, we present the first design space for gaze interaction on HMDs.

2018

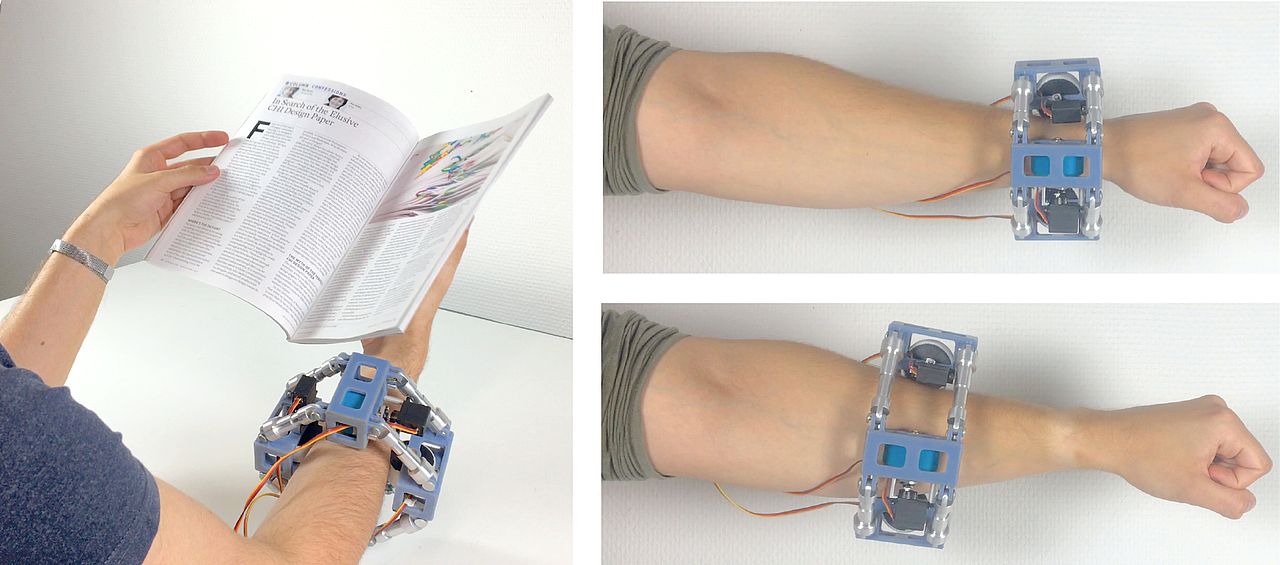

Movelet: A Self-actuated Movable Bracelet for Positional Haptic Feedback on the User's Forearm

We present Movelet, a self-actuated bracelet that can move along the user's forearm to convey feedback via its movement and positioning. In contrast to other eyes-free modalities such as vibrotactile feedback, which only works momentarily, Movelet can provide sustained feedback via its spatial position on the forearm, in addition to momentary feedback through movement. This allows the user to be continuously informed about changing state information through their haptic perception.

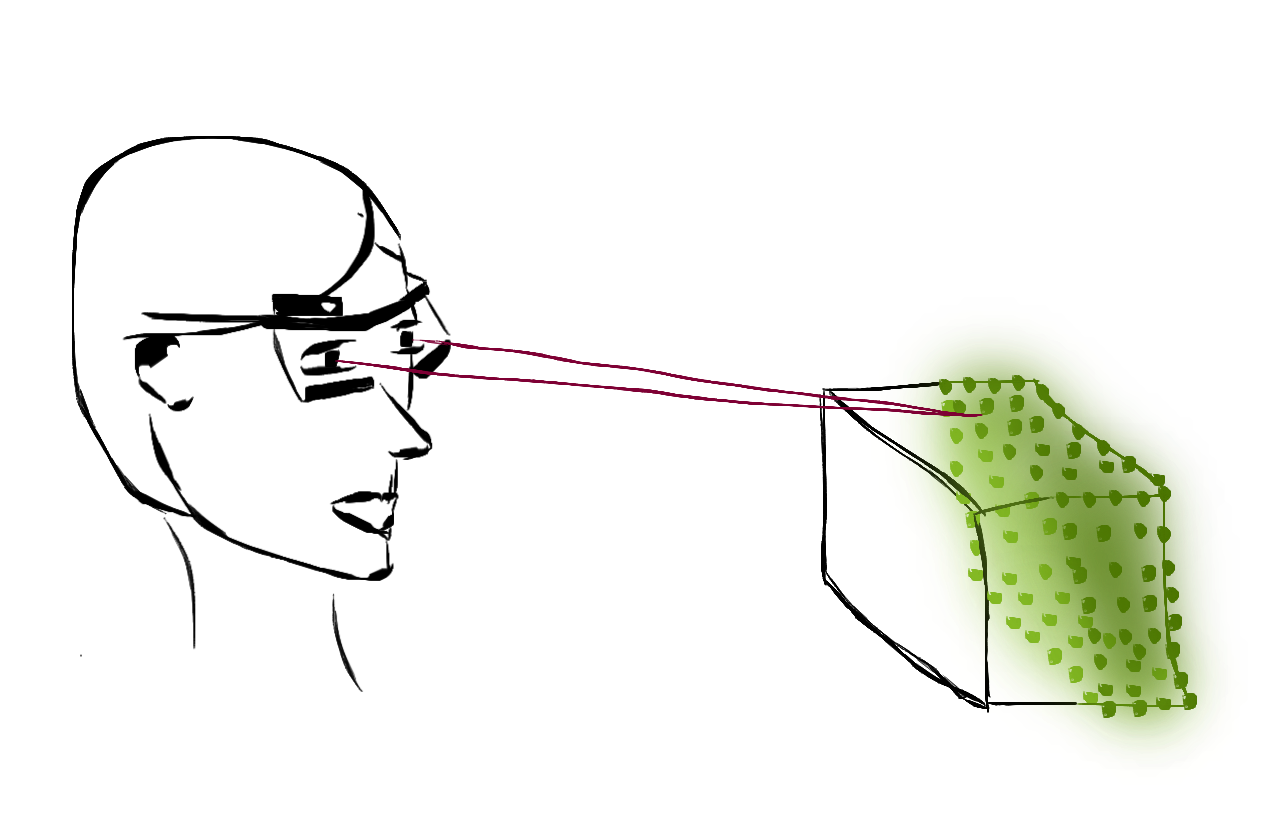

A Symbiotic Human-Machine Depth Sensor

The goal of this project is to explore how much we can learn about physical objects a user is looking at by observing gaze depth. We envision a symbiotic scenario in which current technology (e.g., depth cameras) is extended with "human sensing data". Here, a depth camera creates a rough understanding of a static environment, and gaze depth is merged into the model by leveraging unique properties of human vision.

FaceDisplay: Towards Asymmetric Multi-User Interaction for Nomadic Virtual Reality

Mobile VR HMDs enable scenarios in which they are used in public, excluding everyone nearby (Non-HMD Users) and reducing them to mere bystanders. We present FaceDisplay, a modified VR HMD consisting of three touch-sensitive displays and a depth camera attached to its back. People nearby can perceive the virtual world through the displays and interact with the HMD user via touch or gestures.

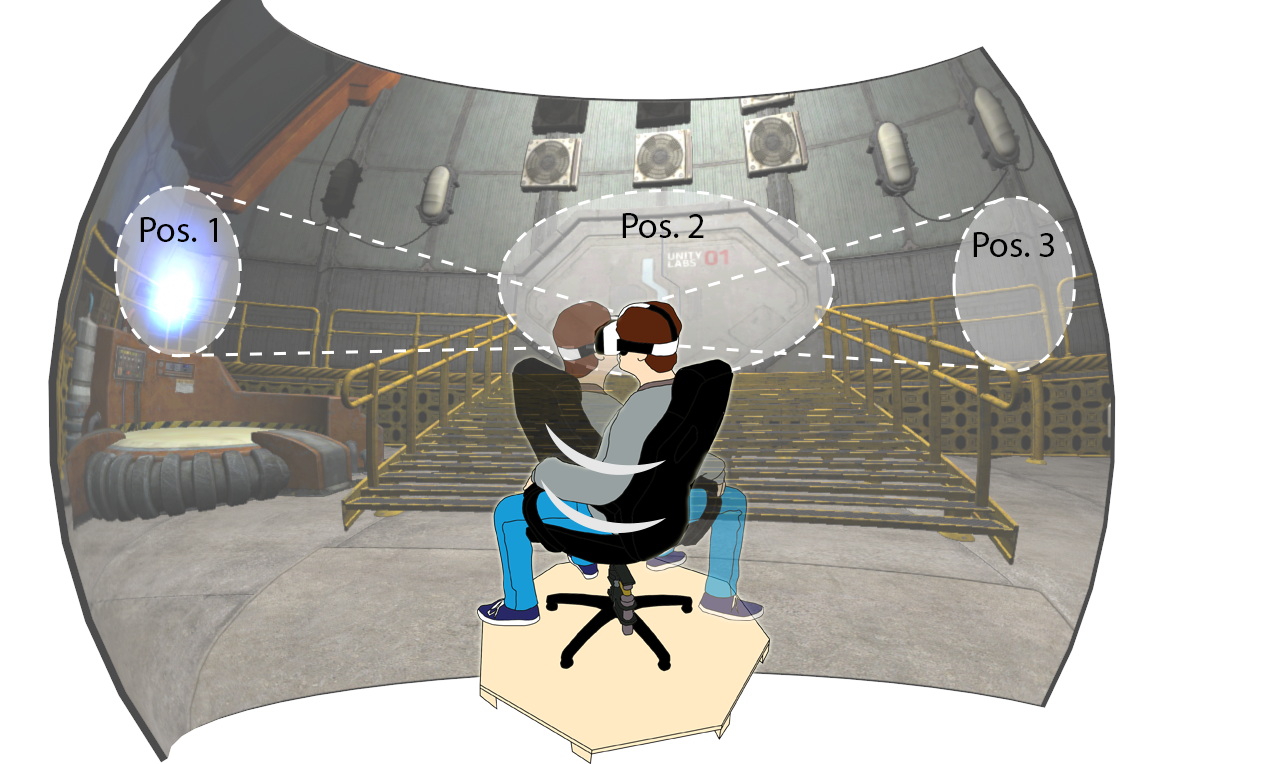

VRSpinning: Exploring the Design Space of a 1D Rotation Platform to Increase the Perception of Self-Motion in VR

We propose VRSpinning, a seated locomotion approach based around stimulating the user's vestibular system using a rotational impulse to induce the perception of linear self-motion.

Performance Envelopes of In-Air Direct and Smartwatch Indirect Control for Head-Mounted Augmented Reality

The scarcity of established input methods for augmented reality (AR) head-mounted displays (HMD) motivates us to investigate the performance envelopes of two easily realisable solutions: indirect cursor control via a smartwatch and direct control by in-air touch.

2017

Interaction With Adaptive and Ubiquitous User Interfaces

Current user interfaces such as public displays, smartphones, and tablets strive to provide a constant flow of information. Although they can all be regarded as a first step towards Mark Weiser's vision of ubiquitous computing, they are still not able to fully achieve the ubiquity and omnipresence Weiser envisioned.

Improving Input Accuracy on Smartphones for Persons who are Affected by Tremor using Motion Sensors

Having a hand tremor often complicates interactions with touchscreens on mobile devices. Due to the uncontrollable oscillations of both hands, hitting targets can be hard, and interaction can be slow. Correcting input needs additional time and mental effort.

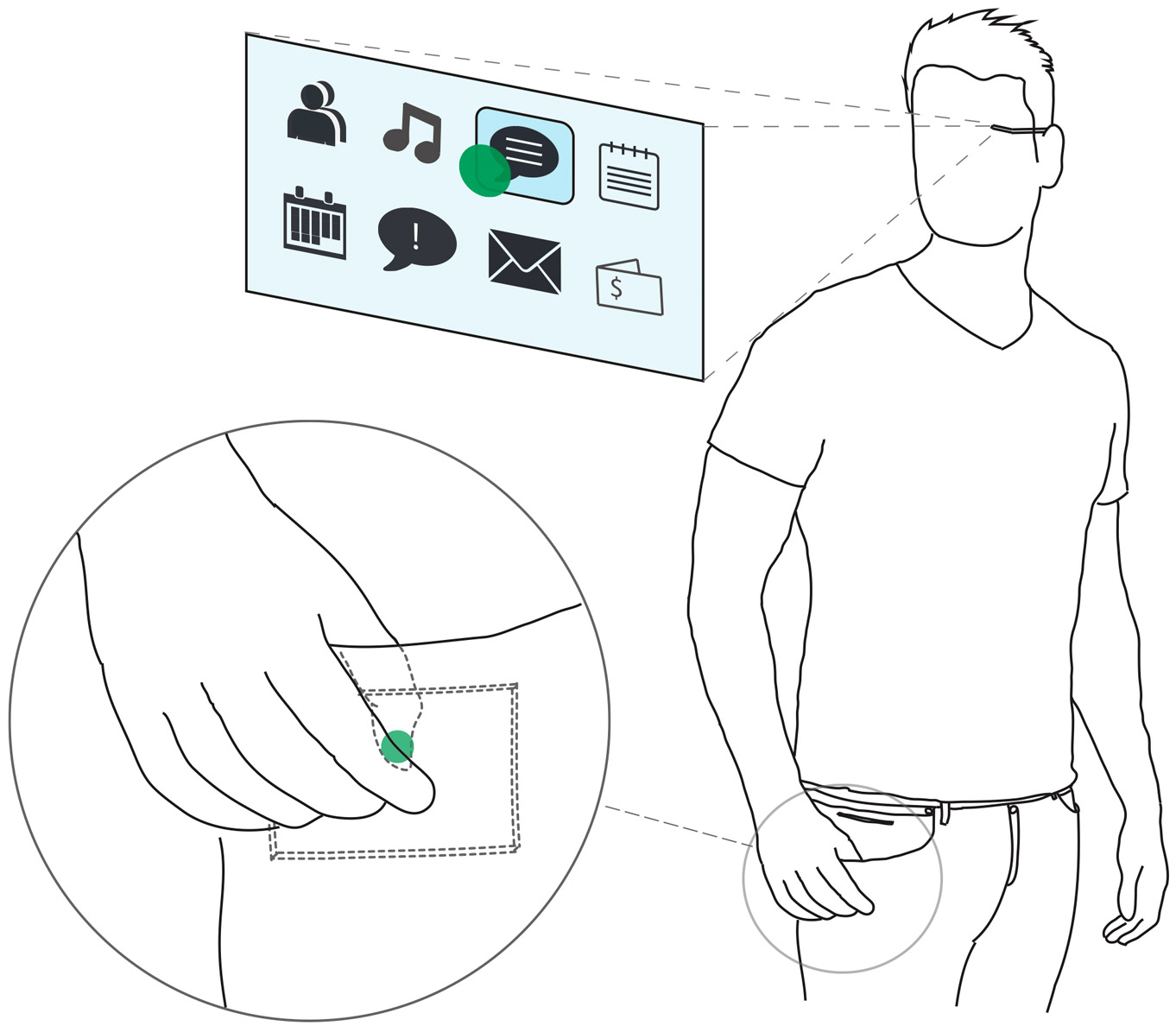

PocketThumb: a Wearable Dual-Sided Touch Interface for Cursor-based Control of Smart-Eyewear

We present PocketThumb, a wearable touch interface for smart eyewear that is embedded into the fabric of the front trouser pockets. The interface is reachable from both outside and inside the pocket to allow for combined dual-sided touch input.

We present inScent, a wearable olfactory display that can be worn in mobile everyday situations and allows the user to receive personal scented notifications.

ShareVR: Enabling Co-Located Experiences for Virtual Reality between HMD and Non-HMD Users

ShareVR is a proof-of-concept prototype using floor projection and mobile displays in combination with positional tracking to visualize the virtual world for the Non-HMD user, enabling them to interact with the HMD user and become part of the VR experience.

2016

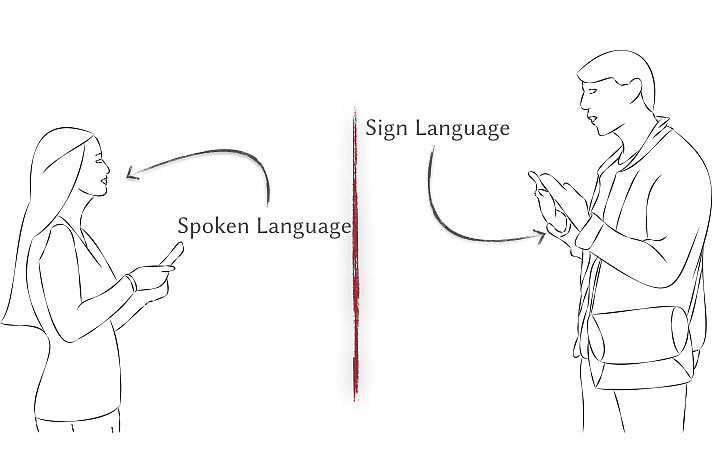

BodySign: Evaluating the Impact of Assistive Technology on Communication Quality Between Deaf and Hearing Individuals

Deaf individuals often experience communication difficulties in face-to-face interactions with hearing people. We investigate the impact of real-time translation-based ATs on communication quality between deaf and hearing individuals.

CarVR: Enabling In-Car Virtual Reality Entertainment

CarVR enables virtual reality entertainment in moving vehicles. We enhance the VR experience by matching the kinesthetic forces of the car's movements to the VR experience.

Carvatar

Carvatar was built to increase trust in automation through social cues. The prototype can imitate human behavior, such as humanoid gaze behavior or calling attention to specific situations. The avatar can be used to establish cooperative communication between driver and vehicle.

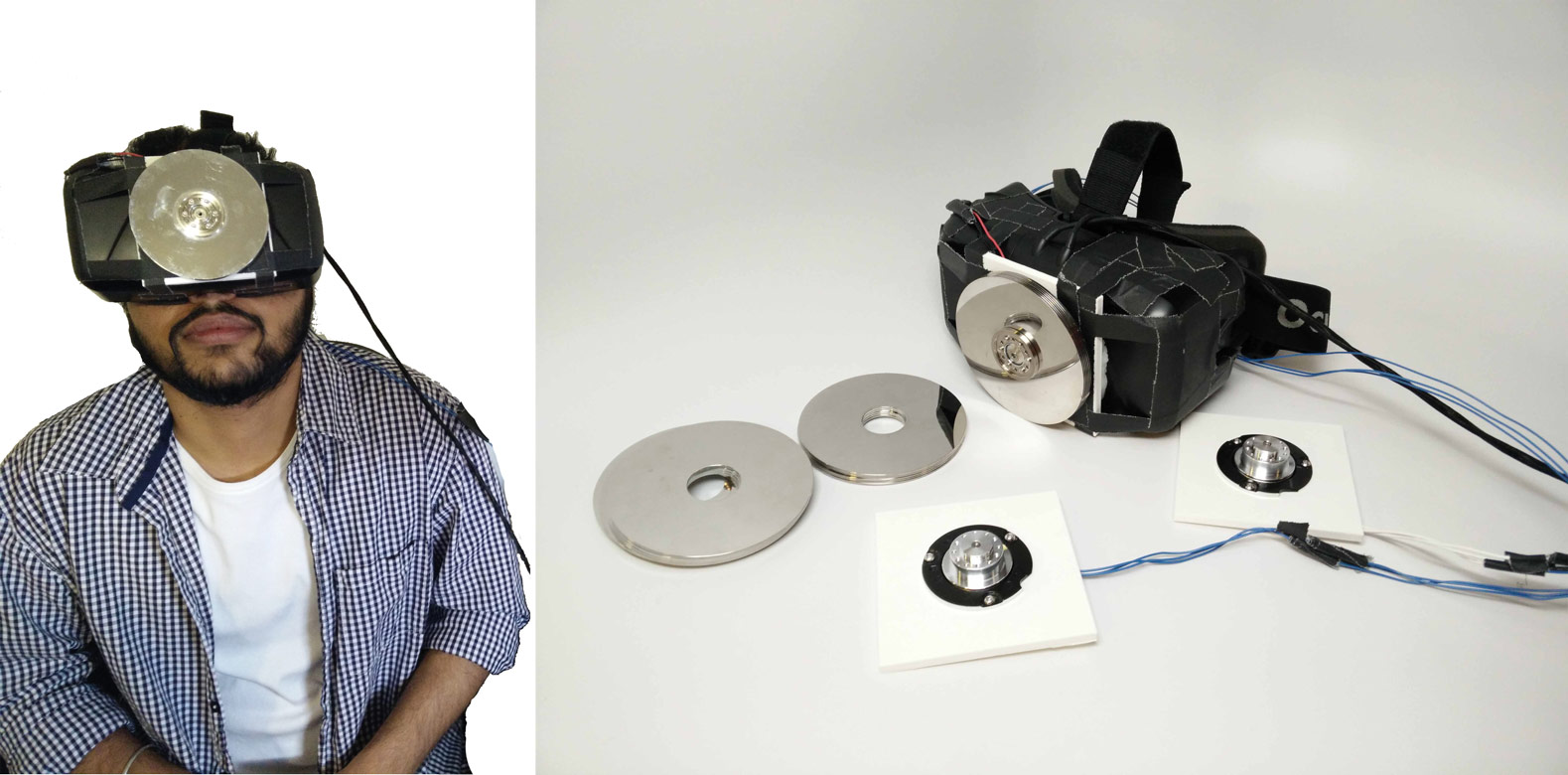

GyroVR: Simulating Inertia in Virtual Reality using Head Worn Flywheels

GyroVR uses head-worn flywheels designed to render inertia in Virtual Reality (VR). Motions such as flying, diving, or floating in outer space generate kinesthetic forces on our body that impede movement and are currently not represented in VR. GyroVR simulates these kinesthetic forces by attaching flywheels to the user's head, which leverage the gyroscopic effect of resistance when the axis of rotation of a spinning wheel is changed. GyroVR is an ungrounded, wireless, and self-contained device that allows the user to move freely inside the virtual environment...

CircularSelection: Optimizing List Selection for Smartwatches

As smartwatches become more widely available, small round touchscreens are increasingly used. Until recently, round touchscreens were rather uncommon, so most current user interfaces for small round touchscreens are still based on rectangular interface designs. Adjusting these standard rectangular interfaces to round touchscreens without losing content comes at the cost of precious display space. To overcome this issue for list interfaces, we introduce CircularSelection, a list selection interface designed especially for small round touchscreens.

FusionKit: Multi-Kinect fusion for markerless and marker-based tracking in HCI

FusionKit is a software suite developed in the course of the research group's participation in the INTERACT project. It aims to be a low-cost tracking system based on multiple Kinect time-of-flight cameras, which enables markerless body tracking as well as marker-based object tracking for a broad range of HCI and tracking scenarios. The toolkit is to be released as fully open-source software and can be used and modified by industry, researchers, and other groups interested in an easy-to-set-up, affordable tracking solution.

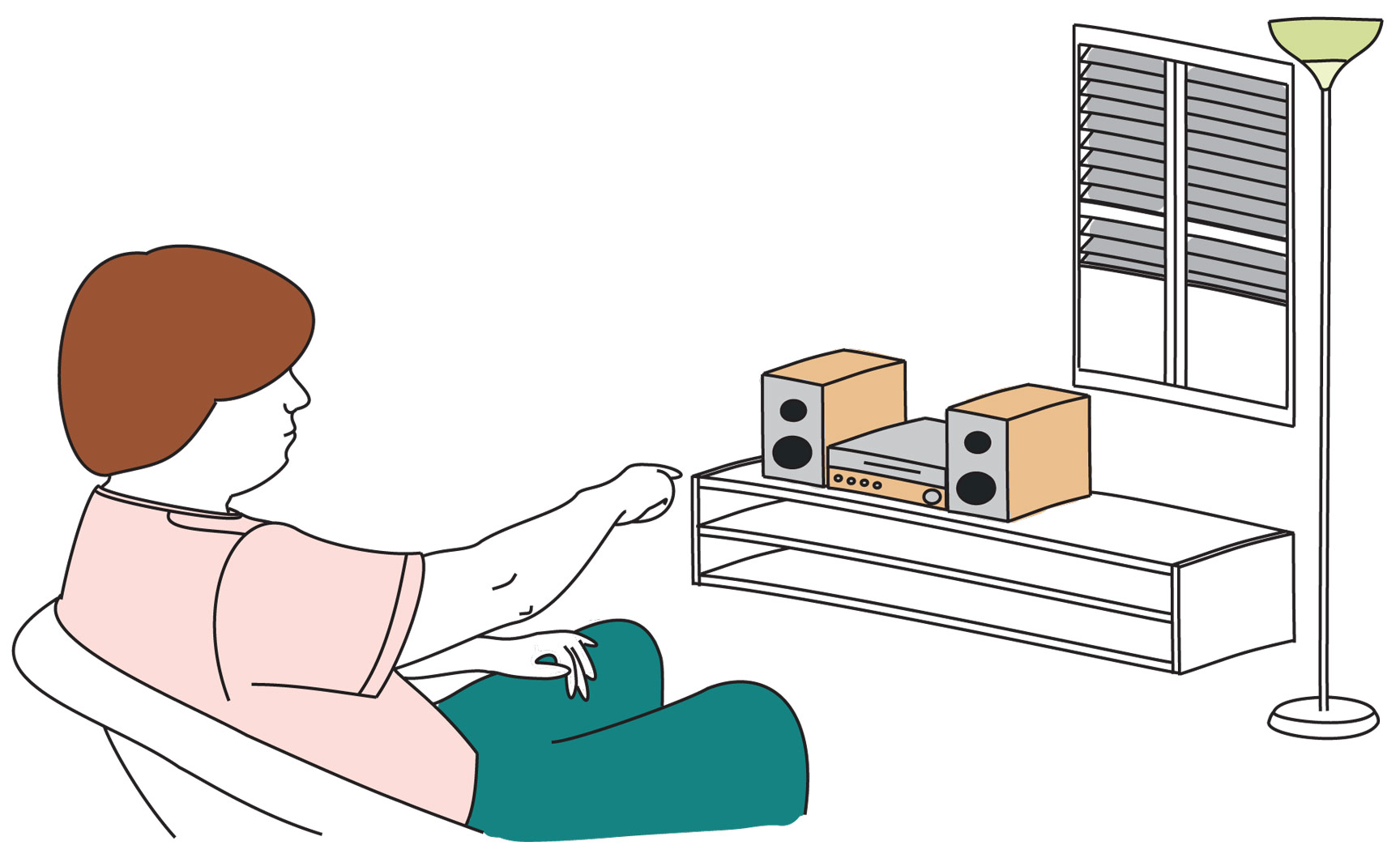

Solving Conflicts for Multi User Mid-Air Gestures for TVs

In recent years, mid-air gestures have become a feasible input modality for controlling and manipulating digital content. When controlling TVs, mid-air gestures eliminate the need to hold remote controls, which are often not at hand or even need to be searched for before use. Thus, mid-air gestures speed up interactions. However, the absence of a single controller and the nature of mid-air gesture detection also pose a disadvantage: gestures performed by multiple viewers may result in conflicts. In this paper, we propose an interaction technique that resolves the conflicts arising in such multi-viewer scenarios.

SwiVRChair: A Motorized Swivel Chair to Nudge Users’ Orientation for 360 Degree Storytelling

Since 360-degree movies are a fairly new medium, creators face several challenges, such as controlling the user's attention. In traditional movies, this is done by applying cuts and tracking shots, which is not possible or advisable in VR, since rotating the virtual scene in front of the user's eyes leads to simulator sickness. One cause of this effect is a mismatch between the physical movement (measured by the vestibular system) and the visual movement.

We present FaceTouch, a mobile Virtual Reality (VR) head-mounted display (HMD) that leverages its backside as a touch-sensitive surface. FaceTouch allows the user to point at and select virtual content inside their field of view by touching the corresponding location on the backside of the HMD, utilizing their sense of proprioception. This allows for rich interaction (e.g., gestures) in mobile and nomadic scenarios without having to carry additional accessories (e.g., a gamepad). We built a prototype of FaceTouch and present interaction techniques and three example applications that leverage the FaceTouch design space.

2015

Towards Accurate Cursorless Pointing: The Effects of Ocular Dominance and Handedness

Pointing gestures are our natural way of referencing distant objects and are thus widely used in HCI for controlling devices. Due to the inherent inaccuracies of current pointing models, most systems using pointing gestures so far rely on visual feedback that shows users where they are pointing. However, in many environments, e.g., smart homes, it is rarely possible to display cursors, since most devices do not contain a display.

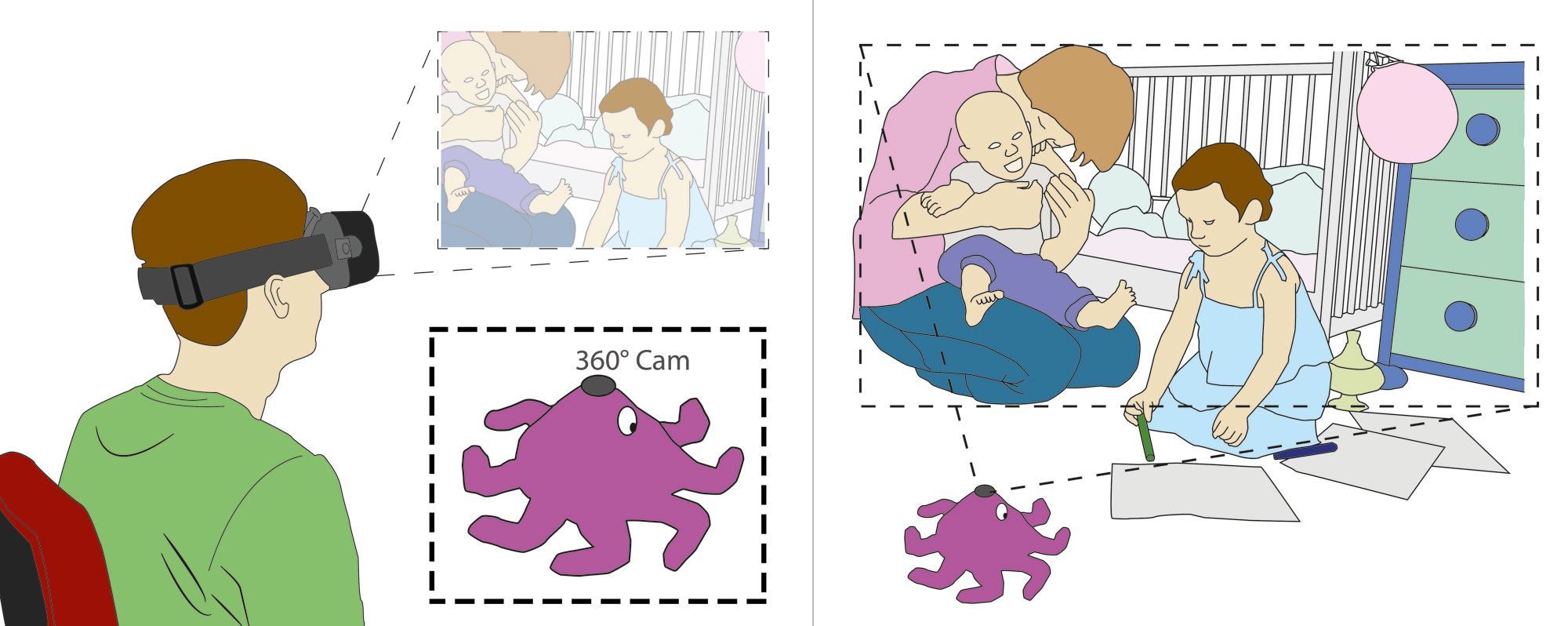

OctiCam: An immersive and mobile video communication device for parents and children

OctiCam is a mobile and child-friendly device that consists of a stuffed toy octopus on the outside and a communication proxy on the inside. Relying only on two squeeze buttons in the tentacles, we simplified the interaction with OctiCam to a child-friendly level. A built-in microphone and speaker allow audio chats, while a built-in camera streams a 360-degree video using a fish-eye lens.

How Companion-Technology can Enhance a Multi-Screen Television Experience: A Test Bed for Adaptive Multimodal Interaction in Domestic Environments

This project deals with a novel multi-screen interactive TV setup (smarTVision) and its enhancement through Companion-Technology. Due to their flexibility and the variety of interaction options, such multi-screen scenarios are hardly intuitive for the user. While research so far has focused on technology and features, the users themselves are often not considered adequately. Companion-Technology has the potential to make such interfaces truly user-friendly. Building upon smarTVision, we envision its extension via concepts of Companion-Technology. This combination represents a versatile test bed that can be used not only to evaluate the usefulness of Companion-Technology in a TV scenario, but also to evaluate Companion-Systems in general.

ColorSnakes: Using Colored Decoys to Secure Authentication in Sensitive Contexts

ColorSnakes is an authentication mechanism based solely on software modification that provides protection against shoulder surfing and, to some degree, against video attacks. A ColorSnakes PIN consists of a starting colored digit followed by four consecutive digits. From the starting colored digit, users indirectly draw a path (the selection path) consisting of their PIN. The input path can be drawn anywhere on the grid.

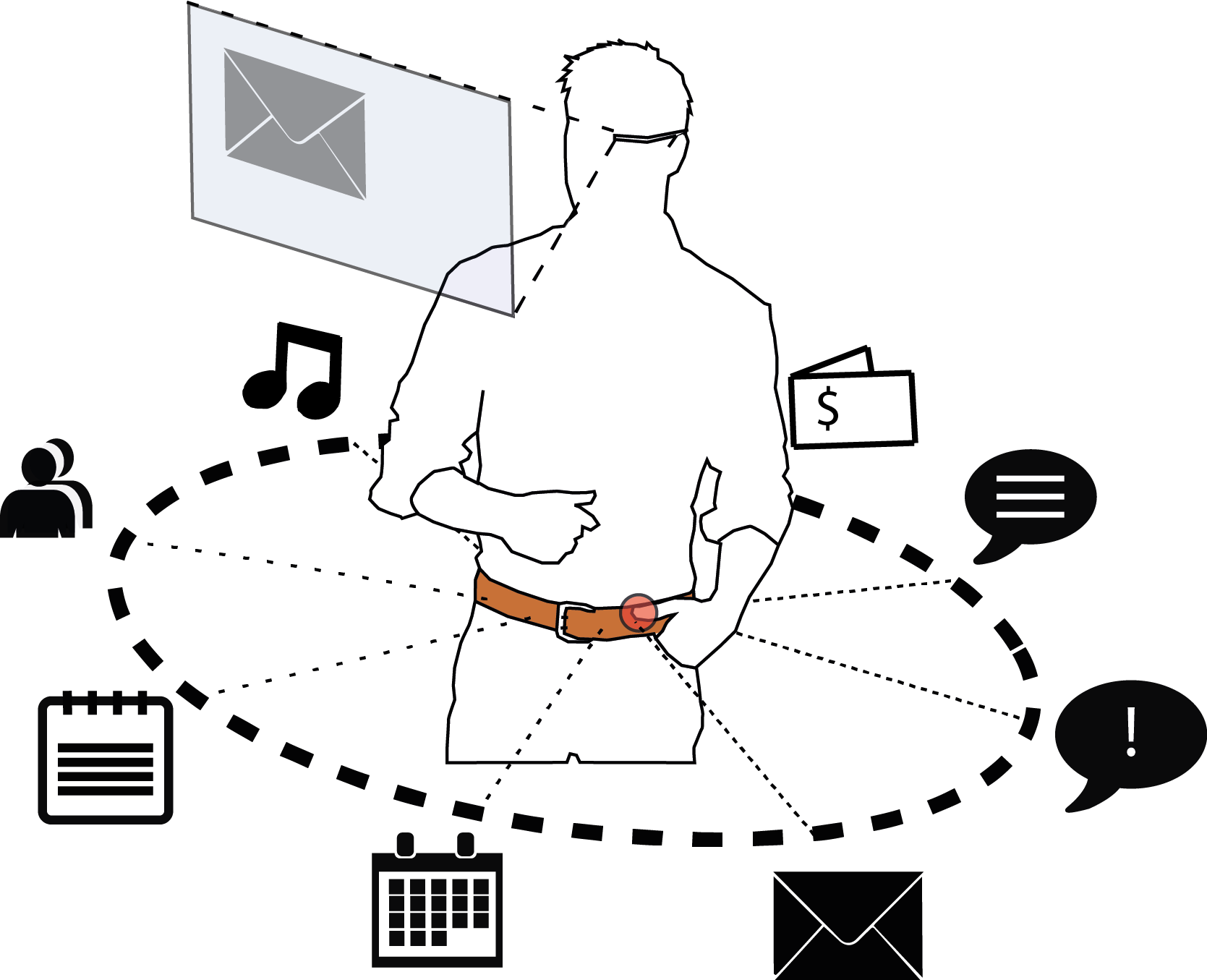

Belt: An Unobtrusive Touch Input Device for Head-worn Displays

Belt is a novel unobtrusive input device for wearable displays that incorporates a touch surface encircling the user’s hip. The wide input space is leveraged for a horizontal spatial mapping of quickly accessible information and applications. We discuss social implications and interaction capabilities for unobtrusive touch input and present our hardware implementation and a set of applications that benefit from the quick access time.

Glass Unlock: Enhancing Security of Smartphone Unlocking through Leveraging a Private Near-eye Display

Glass Unlock is a novel concept that uses smart glasses for smartphone unlocking and is theoretically secure against smudge attacks, shoulder surfing, and camera attacks. By introducing an additional temporary secret, such as a layout of digits that is shown only on the private near-eye display, attackers cannot make sense of the observed input on the almost empty phone screen.
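
The temporary-secret idea can be illustrated with a small sketch. This is not the Glass Unlock implementation: it assumes a 10-key keypad whose key positions are numbered 0 to 9, a fresh random digit layout per unlock attempt that only the near-eye display shows, and a phone that knows the same layout. All function names here are hypothetical.

```python
import secrets

DIGITS = "0123456789"


def new_layout() -> list[str]:
    """Hypothetical helper: a fresh random digit-to-key assignment.
    Key position i shows layout[i], but only on the private near-eye display."""
    layout = list(DIGITS)
    secrets.SystemRandom().shuffle(layout)  # cryptographically strong shuffle
    return layout


def positions_for_pin(pin: str, layout: list[str]) -> list[int]:
    """Key positions the user taps on the blank phone keypad to enter pin."""
    return [layout.index(digit) for digit in pin]


def decode_taps(taps: list[int], layout: list[str]) -> str:
    """Phone side: map tapped key positions back to digits using the secret layout."""
    return "".join(layout[pos] for pos in taps)


if __name__ == "__main__":
    layout = new_layout()  # regenerated for every unlock attempt
    taps = positions_for_pin("2580", layout)
    print("observer sees taps at positions:", taps)
    print("unlock succeeds:", decode_taps(taps, layout) == "2580")
```

Because the layout changes with every attempt, the same tap positions map to different digits across attempts, so an observer who records only the phone screen learns positions but not the PIN.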

2014

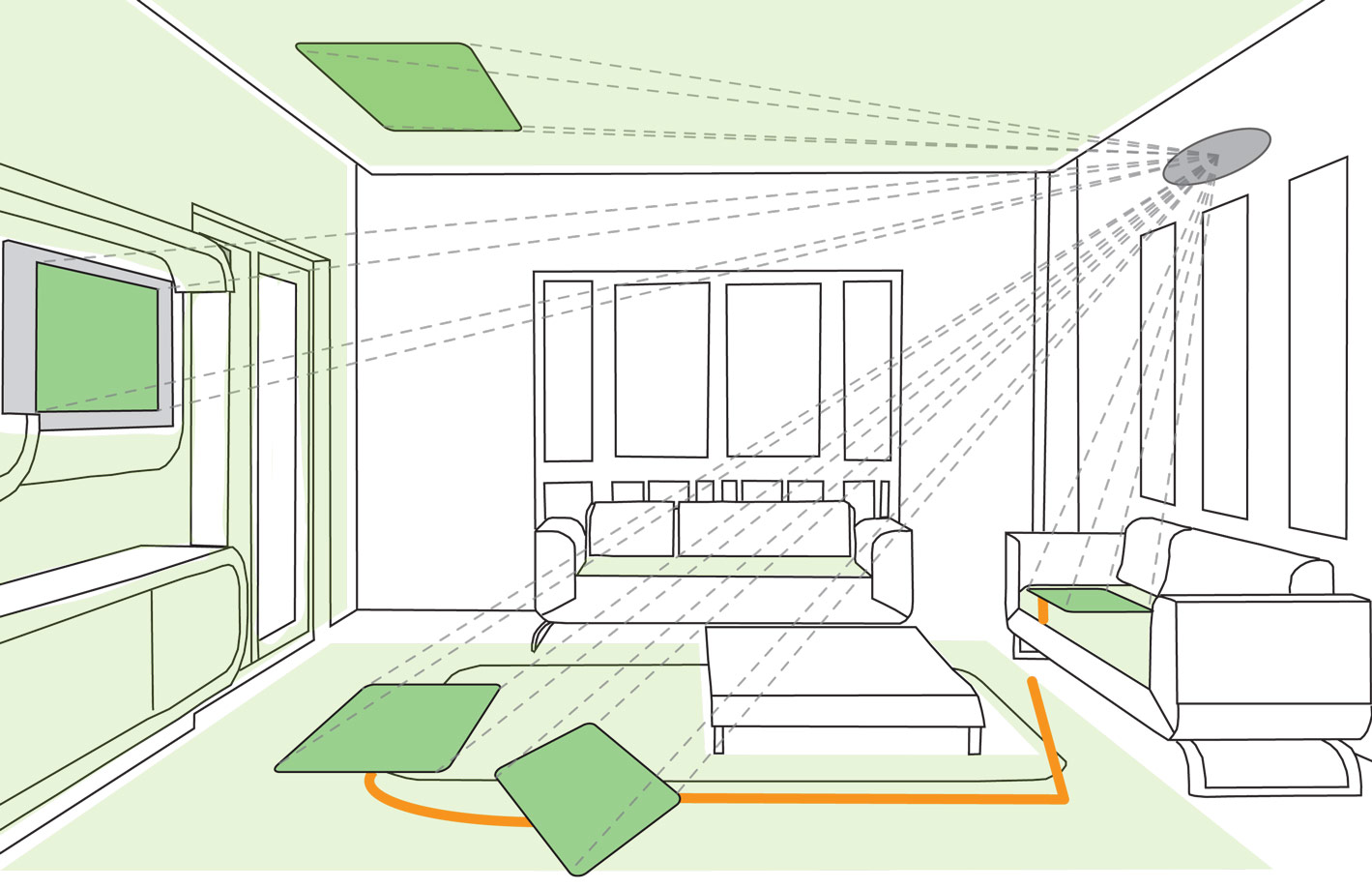

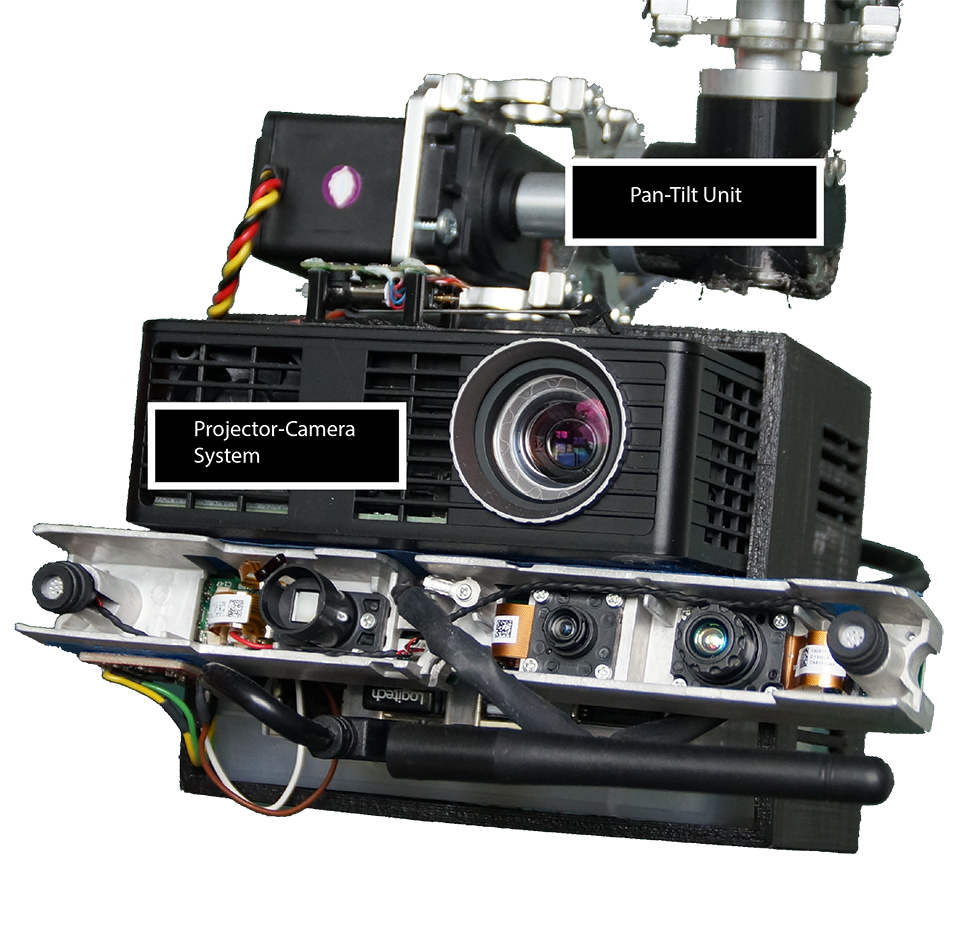

UbiBeam: An Interactive Projector-Camera System for Domestic Deployment

We conducted an in-situ user study by visiting 22 households and exploring specific use cases and ideas for portable projector-camera systems in a domestic environment. Using a grounded theory approach, we identified several categories, such as interaction techniques, presentation space, placement, and use cases. Based on our observations, we designed and implemented UbiBeam, a domestically deployable projector-camera system.

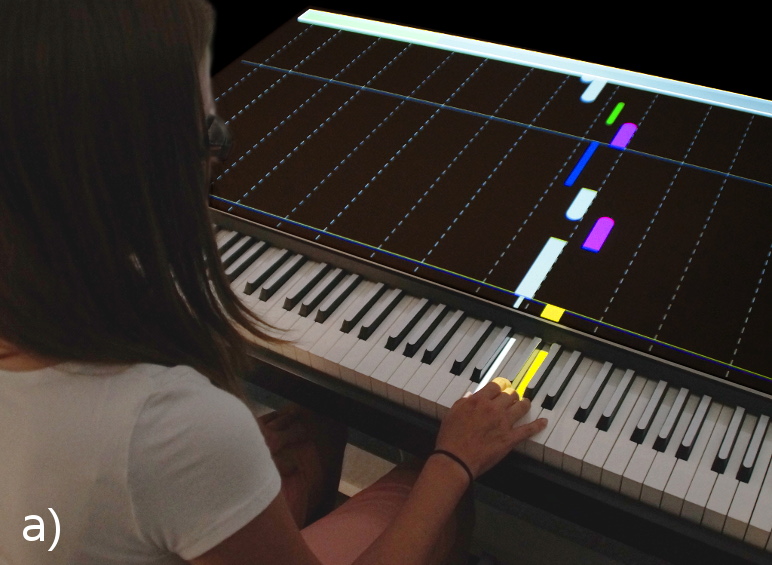

P.I.A.N.O.: Faster Piano Learning with Interactive Projection

We designed P.I.A.N.O., a piano learning system with interactive projection that facilitates a fast learning process. Note information in form of an enhanced piano roll notation is directly projected onto the instrument and allows mapping of notes to piano keys without prior sight-reading skills. Three learning modes support the natural learning process with live feedback and performance evaluation. P.I.A.N.O. supports faster learning, requires significantly less cognitive load, provides better user experience, and increases perceived musical quality compared to sheet music notation and non-projected piano roll notation.

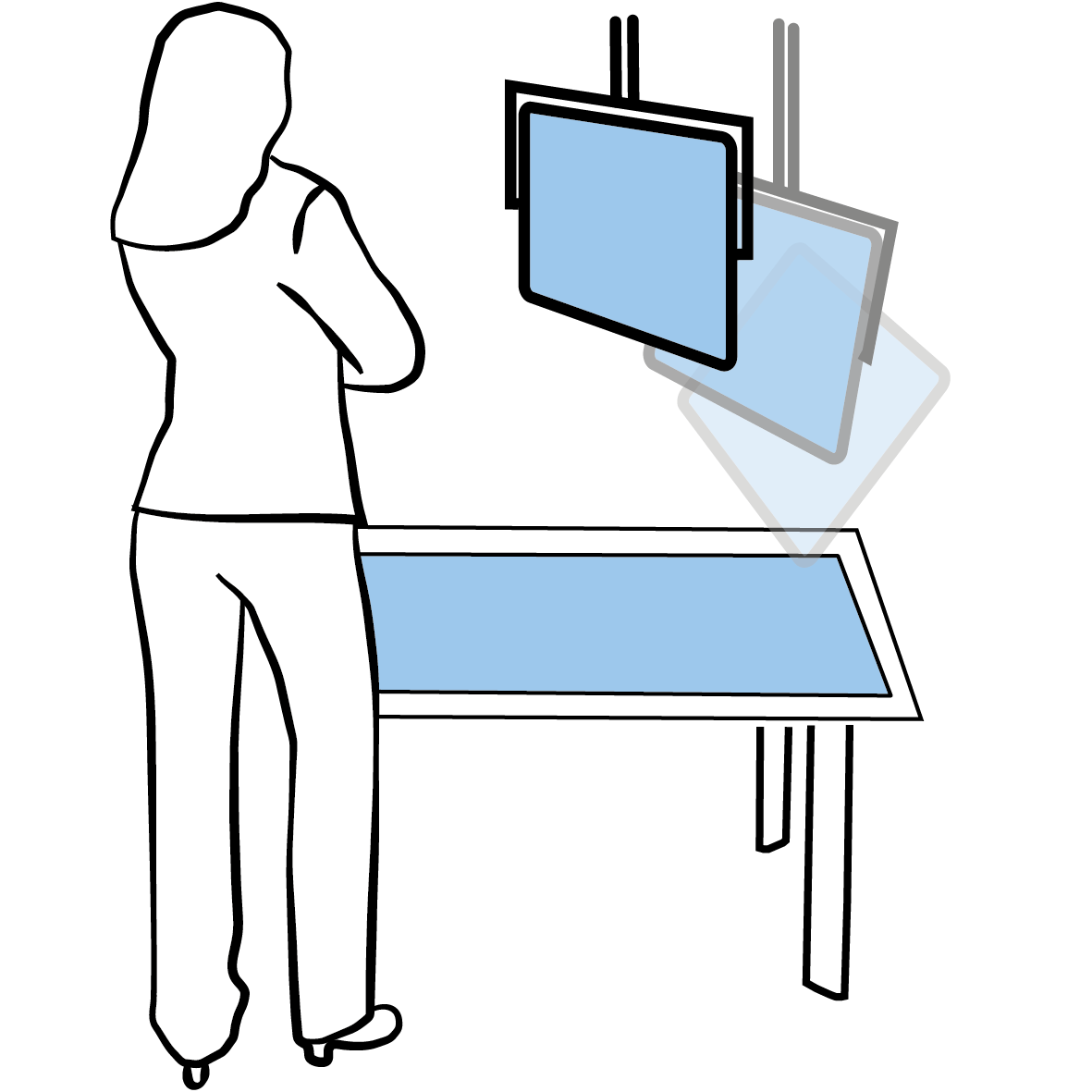

Hover Pad: Interacting with Autonomous and Self-Actuated Displays in Space

In this work, we investigate the use of autonomous, self-actuated displays that can freely move and hold their position and orientation in space without the need for users holding them at all times. We illustrate various stages of such a display’s autonomy ranging from manual to fully autonomous, which – depending on the tasks – facilitate the interaction. Further, we discuss possible motion control mechanisms for these displays and present several interaction techniques made possible by such displays. We designed a toolkit – Hover Pad – that enables exploring five degrees of freedom of self-actuated and autonomous displays and the developed control and interaction techniques.

ASSIST: Gaze- and Gesture-Based Assistance Systems for Users with Motor Impairments

Many people live with varying limitations of their motor skills and mobility for reasons of age or health. They can no longer carry out many tasks in their homes independently or depend on help from others. The goal of ASSIST is to remedy this. The project investigates to what extent functions in smart living spaces can be selected and controlled using gestures and gaze.

Pervasive Information through Constant Personal Projection: The Ambient Mobile Pervasive Display (AMP-D)

We introduce the concept of an Ambient Mobile Pervasive Display (AMP-D), a wearable projector system that constantly projects an ambient information display in front of the user. The floor display provides serendipitous access to public and personal information. The display is combined with a projected display on the user's hand, forming a continuous interaction space that is controlled by hand gestures. The AMP-D prototype illustrates the involved challenges concerning hardware, sensing, and visualization and shows several application examples.

SurfacePhone: A Mobile Projection Device for Single- and Multiuser Everywhere Tabletop Interaction

In this work, we present SurfacePhone, a novel configuration of a projector phone that aligns the projector to project onto a physical surface to allow ad-hoc tabletop-like interaction in a mobile setup. The projection is created behind the upright standing phone and is touch- and gesture-enabled. Multiple projections can be merged to create shared spaces for multi-user collaboration.

Broken Display = Broken Interface? The Impact of Display Damage on Smartphone Interaction

This work is the first to assess the impact of touchscreen damage on smartphone interaction. We gathered a dataset consisting of 95 close-up images of damaged smartphones and extensive information about each device's usage history, damage severity, and impact on use. Further interviews revealed that users adapt to damage with diverse coping strategies, closely tailored to specific interaction issues. Based on our results, we propose guidelines for interaction design that provide a positive user experience when display damage occurs.

2013

Penbook: Bringing Pen+Paper Interaction to a Tablet Device to Facilitate Paper-Based Workflows in the Hospital Domain

In many contexts, pen and paper are the ideal option for collecting information despite the pervasiveness of mobile devices. Reasons include the unconstrained nature of sketching or handwriting, as well as the tactility of moving a pen over paper, which supports very fine-grained control of the pen. In hospitals in particular, many writing and note-taking tasks are still performed using pen and paper. This work presents the Penbook concept, details specific applications in a hospital context, and presents a prototype implementation of Penbook.

From the Private Into the Public

Interactive horizontal surfaces provide large semi-public or public displays for co-located collaboration. In many cases users want to show, discuss, and copy personal information or media, which are typically stored on their mobile phones, on such a surface. This paper presents three novel direct interaction techniques (Select&Place2Share, Select&Touch2Share, and Shield&Share) that allow users to select in private which information they want to share on the surface. All techniques are based on physical contact between mobile phone and surface. Users touch the surface with their phone or place it on the surface to determine the location for information or media to be shared. We compared these three techniques with the most frequently reported approach that immediately shows all media files on the table after placing the phone on a shared surface.

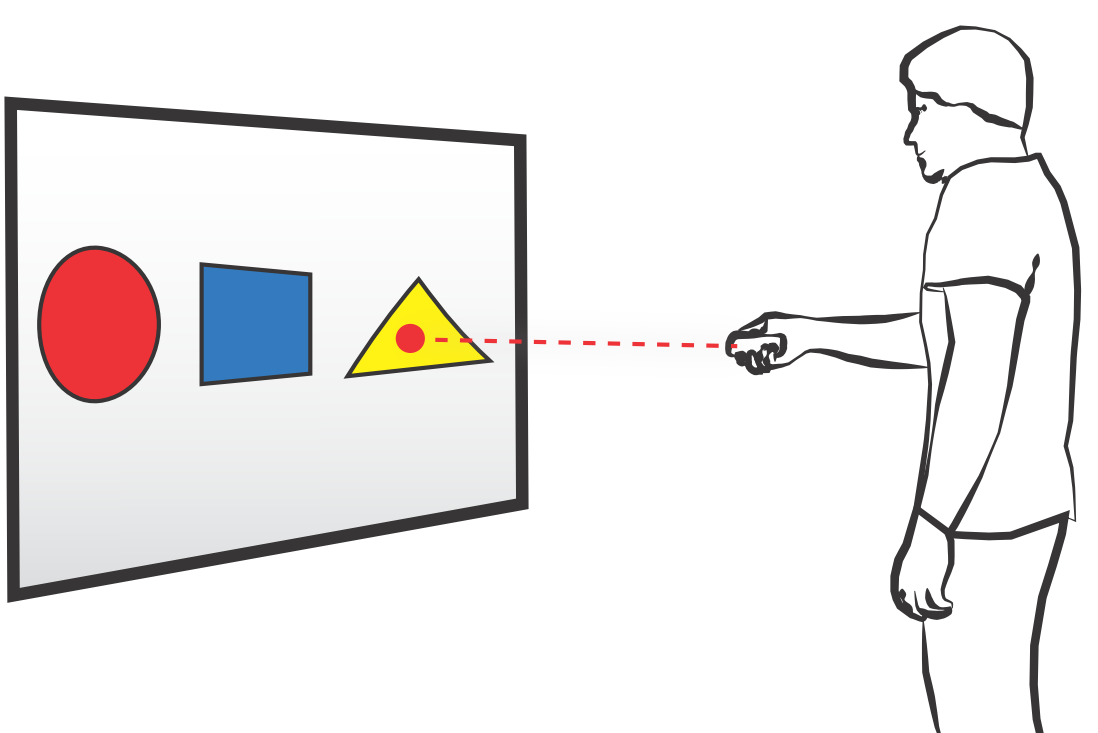

PointerPhone

We present the concept and design space of PointerPhone, which enables users to directly point at objects on a remote screen with their mobile phone and interact with them in a natural and seamless way. We detail the design space and distinguish three categories of interactions, including low-level interactions that use the mobile phone as a precise and fast pointing device as well as an input and output device. We detail the category of widget-level interactions. Further, we demonstrate versatile high-level interaction techniques and show their application in a collaborative presentation scenario. Based on the results of a qualitative study, we provide design implications for application design.

Extending Mobile Interfaces Using External Screens

We present an approach that allows users to establish an ad-hoc connection between their mobile devices and external displays by holding the phone against the border of an external screen, temporarily extending the mobile user interface across the mobile and the external screen. This allows users to take advantage of existing large displays in their environment by spanning the mobile application's user interface across multiple displays, which makes it possible to display more information at once.

2012

MobiSurf: Improving Co-located Collaboration through Integrating Mobile Devices and Interactive Surfaces

In this work, we investigated how combining personal devices with a simple way of exchanging information between them and an interactive surface changes the way people solve collaborative tasks, compared to an existing approach that uses personal devices only. Our study results clearly indicate that the combination of personal devices and a shared device allows users to switch fluently between individual and group work phases, and that users take advantage of both device classes.

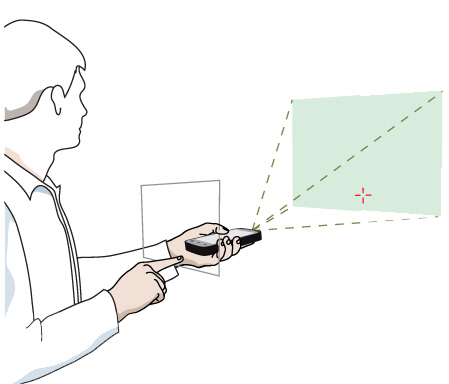

Investigating Mid-Air Pointing Interaction for Projector Phones

Projector phones, mobile phones with built-in projectors, might significantly change the way we use and interact with mobile phones. This project explores, for the first time, the potential of combining the mobile and the projected display and of the mid-air space between them. Results from two studies with several gesture pointing techniques indicate that interacting behind the phone yields the highest performance, albeit with twice the error rate. Furthermore, they show that mobile applications benefit from the projection, e.g., by overcoming the fat-finger problem on touchscreens and increasing the visibility of small objects.

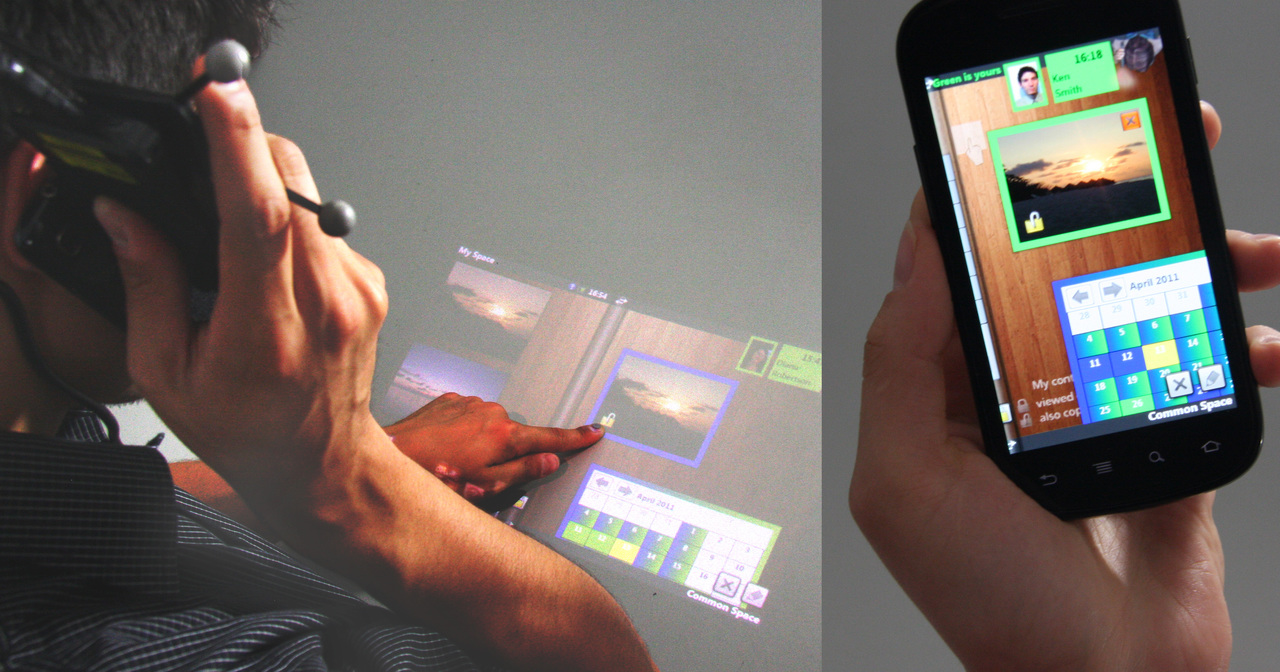

A Cross Device Interaction Style

Natural forms of interaction have evolved for personal devices that we carry with us (mobiles) as well as for shared interactive displays around us (surfaces) but interaction across the two remains cumbersome in practice. We propose a novel cross device interaction style for mobiles and surfaces that uses the mobile for tangible input on the surface in a stylus-like fashion. Building on the direct manipulation that we can perform on either device, it facilitates fluid and seamless interaction spanning across device boundaries. We provide a characterization of the combined interaction style in terms of input, output, and contextual attributes, and demonstrate its versatility by implementation of a range of novel interaction techniques for mobile devices on interactive surfaces.

Don't Queue Up! User Attitudes Towards Mobile Interactions with Public Terminals.

Public terminals for service provision offer high convenience to users due to their constant availability. Yet interaction with them lacks security and privacy, as it takes place in a public setting. Additionally, users have to wait in line until they can interact with the terminal. In contrast, personal mobile devices allow for private service execution. Since many services, like withdrawing money from an ATM, require physical presence at the terminal, hybrid approaches have been developed that move parts of the interaction to a mobile device. In this work, we present the results of a four-week real-world user study in which we investigated whether hybrid approaches would actually be used.

2011

Interactive Phone Call

Smartphones provide large amounts of personal data and functionality, but during phone calls the phone cannot be used for much beyond voice communication and offers no support for synchronous collaboration. This is because, first, despite the availability of alternatives, the phone is typically held to one's ear, and second, the small mobile screen is poorly suited to existing collaboration software. This work presents a novel in-call collaboration system that leverages projector phones, as they provide a large display that can be used while holding the phone to the ear, to project an interactive interface anytime and anywhere.
